Philosophy of science

The philosophy of science, a sub-branch of epistemology, is the branch of philosophy that studies the philosophical assumptions, foundations, and implications of science. This includes the natural sciences such as physics, chemistry, and biology; the social sciences such as psychology, history, and sociology; and sometimes (especially beginning about the second decade of the twentieth century) the formal sciences, such as logic, mathematics, set theory, and proof theory. In this last respect, the philosophy of science is often closely related to philosophy of language, philosophy of mathematics, and formal systems of logic and formal languages. The twentieth century witnessed a proliferation of research and literature on the philosophy of science. Debate among philosophers of science is robust, and much within the discipline remains inconclusive. For nearly every assertion advanced in the discipline, a philosopher can be found who will disagree with it in some fashion.

Questions Addressed by Philosophy of Science

Philosophy of science investigates and seeks to explain such questions as:

- What is science? Is there one thing that constitutes science, or are there many different kinds or fields of inquiry that are different but are nevertheless called sciences?

- Does or can science lead to certainty?

- How is genuine or true science to be distinguished (demarcated, to use the term philosophers usually use) from non-science or pseudoscience? Or is this impossible, and, if so, what does this imply for claims that some things are pseudosciences?

- What is the nature of scientific statements, concepts, and conclusions; how are they created; and how are they justified (if justification is indeed possible)?

- Is there any such thing as a scientific method? If there is, what types of reasoning does it use to arrive at and formulate conclusions, and are there any limits to this method or methods?

- Is the growth of science cumulative or revolutionary?

- For a new scientific theory, can one say it is "nearer to the truth," and, if so, how? Does science make progress, in some sense of that term, or does it merely change? If it does make progress, how is progress determined and measured?

- What means should be used for determining the acceptability, validity, or truthfulness of statements in science, i.e. is objectivity possible, and how can it be achieved?

- How does science explain, predict and, through technology, harness nature?

- What are the implications of scientific methods and models for the larger society, including for the sciences themselves?

- What is the relationship, if any, between science and religion, and between science and ethics, or are these completely separate?

Those questions may always have existed in some form, but they came to the fore in Western philosophy after the coming of what has been called the scientific revolution, and they became especially central and much-discussed in the twentieth century, when philosophy of science became a self-conscious and highly investigated discipline.

It must be noted that, despite what some scientists or other people may say or think, all science is philosophy-embedded. Philosopher Daniel Dennett has written, "There is no such thing as philosophy-free science; there is only science whose philosophical baggage is taken on board without examination."[1]

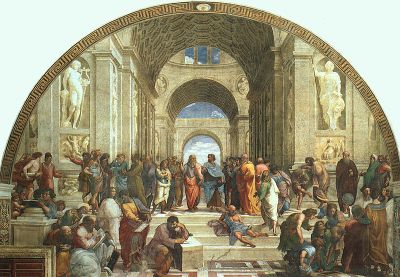

The Ancient Greek Period

Although philosophy of science emerged as a separate and self-conscious branch of philosophy relatively recently (within the last two or three centuries in Western thought), its beginnings go back to the beginning of philosophy itself. The first epoch occurred in ancient Greek philosophy, in what is now known as the Pre-Socratic period, encompassing the philosophers now known as the Pre-Socratics (Thales, Anaximander, Anaximenes, Xenophanes, Pythagoras, Parmenides, Heraclitus, Anaxagoras, Empedocles, Democritus, and Protagoras), and it went on to include the heights of Greek philosophy with Socrates, Plato, and Aristotle. The main emphasis of the Pre-Socratics was determining the basic elements of the universe: proposals included water, air, fire, the boundless, numbers, atoms, and being itself. They also attempted to find the ordering principle of existence, or at least an ordering principle; mind and mechanical causation were suggested. Later in the Pre-Socratic period, with the arrival of the Sophists, attention shifted from nature to man; we could call this a movement away from natural science toward social science.

Another central feature of the Pre-Socratic epoch was a movement away from a deocentric (God- or gods-based) explanation of things to a naturalistic (nature-centered) one. One could say, for a salient example, that the first verse of Genesis in the Bible, "In the beginning God created the heavens and the earth" (Genesis 1:1), offered a deocentric account of the origin of everything, and implied, tacitly if not explicitly, that study of nature, or natural science, would need to begin with, or at least make central reference and connection to, theology. The Pre-Socratics, however, moved away from that view, and since their time there has been tension and open (sometimes heated and hostile) disagreement among philosophers, scientists, theologians, and religious-minded people about whether there is or should be a role for or connection between science and religion, and between philosophy and theology.

Socrates and Plato were better known for their interests in ethics and politics than for natural philosophy, but they made important and lasting contributions here too. One of these was a focus on definition, that is, drawing boundaries around things or concepts.

Socrates seems to have thought that to know is to be able to define. Later on, especially in the twentieth century, what is known as the demarcation problem became prominent in philosophy of science; this was the problem of drawing a hard or bright line between science and non-science.

Aristotle's Philosophy of Science

Aristotle produced the first great philosophy of science, although his work in this field is widely denigrated today. Among other problems, his discussions about science were only qualitative, not quantitative, and he had little appreciation for mathematics. By the modern definition of the term, Aristotelian philosophy was not science, because this worldview did not attempt to probe how the world actually works through experiment and empirical test. Rather, starting from what the senses report, Aristotelian philosophy assumed that the human mind could elucidate all the laws of the universe through reason alone, on the basis of simple observation without experimentation. In contrast, science today rests on the position that thinking alone often leads people astray, and therefore one must compare one's ideas to the actual world through experimentation; only then can one see whether one's ideas are grounded in reality.

One of the reasons for Aristotle's conclusions was that he held that physics was about changing objects with a reality of their own, whereas mathematics was about unchanging objects without a reality of their own. Within this philosophy, he could not imagine any relationship between the two.

Aristotle presented a doctrine of there being four "causes" of things, but the word cause (Greek: αἰτία, aitia) is not used in the modern sense of "cause and effect," under which causes are events or states of affairs. Rather, the four causes (material, formal, efficient, and final) are like different ways of explaining something: the material and formal causes are internal to the thing and separable only in thought, while the efficient and final causes are external.

The material cause is the material that makes up an object; for example, "the bronze and silver ... are causes of the statue and the bowl." The formal cause is the blueprint, or the idea commonly held of what an object should be. Aristotle says, "The form is the account (and the genera of the account) of the essence (for instance, the cause of an octave is the ratio two to one, and in general number), and the parts that are in the account." The efficient cause is the person who makes an object, or the "unmoved movers" (gods) who move nature. For example, "a father is a cause of his child; and in general the producer is a cause of the product and the initiator of the change is a cause." This is closest to the modern definition of "cause." The final cause, or telos, is the purpose or end that something is supposed to serve. This includes "all the intermediate steps that are for the end ... for example, slimming, purging, drugs, or instruments are for health; all of these are for the end, though they differ in that some are activities while others are instruments." An example of an artifact that has all four causes would be a table, which has material causes (wood and nails), a formal cause (the blueprint, or a generally agreed idea of what tables are), an efficient cause (the carpenter), and a final cause (using it to dine on).

Aristotle argues that natural objects such as an "individual man" have all four causes. The material cause of an individual man would be the flesh and bone that make him up. The formal cause would be the blueprint of man, which is used as a guide to create an individual man and to keep him in a certain state called man. The efficient cause of an individual man would be his father, or, in the case of all men, an "unmoved mover" who breathed (anima: breath) into the soul (anima: soul) of man. The final cause of man would be, as Aristotle stated, "Now we take the human's function to be a certain kind of life, and take this life to be the soul's activity and actions that express reason. Hence the excellent man's function is to do this finely and well. Each function is completed well when its completion expresses the proper virtue. Therefore the human good turns out to be the soul's activity that expresses virtue."

Aristotle also investigated movement and gravity. He did not know about the principle of inertia, and held that movement must always be caused by something.

The ancient Greeks, and especially Aristotle, noted the difference between natural objects and artificial ones, or artifacts: natural objects have self-movement, or the principle of their motion within them, whereas artificial things have their existence and motion through human agency. An elephant and an oak tree, for example, are natural objects that move from principles within them, while an automobile and a bicycle are artifacts that move by principles external to them and dependent on human agency.

The Copernican Revolution

The Copernican revolution in science, beginning in the sixteenth century, also had far-reaching consequences for philosophy. Until that time, science and philosophy of science had operated by rational thought far more than by empirical observation. But the Copernican revolution, based as it was on observation of the world more than on thought and thought processes, meant that science became much more empirically and experimentally oriented than it had been in the Medieval-Aristotelian-Scholastic period, and a philosophy of science that acknowledged the primary role (or at least a large role) of observation and experiment in science came to the fore. Here a split arose between Britain and the European continent, with British philosophy becoming strongly empiricist, especially in the work of John Locke, George Berkeley, and David Hume, and continental philosophy being more rationalist, especially in the philosophy of René Descartes, Baruch Spinoza, and Gottfried Leibniz.

The Nineteenth Century

In the nineteenth century a great opposition arose between two camps of philosophers of science, and that opposition continued into the post-World War II era. One group, often called inductivists, claimed that scientific hypothesis formation is an inductive process arising from observation of particular items of evidence, and that causation is nothing but observed regularity. According to this view, a supposed inductive logic and procedure give rise to and justify hypotheses. John Stuart Mill can be taken as the nineteenth-century exemplar of that view; some (among many) others who took that position were Ernst Mach, Pierre Duhem, Philipp Frank, Carl Hempel, Rudolf Carnap, R.B. Braithwaite, and most members of the Vienna Circle. This group of philosophers is sometimes called reductionists, and their attitude reductionism. Other forms of this view are sometimes called instrumentalism, meaning that scientific theories are just instruments for making predictions, and operationalism, which claims to reduce all empirical statements to experimental operations. In addition, many exponents of this view, including Mill, held that the axioms of mathematics are nothing more than generalizations from experience. Later developers of set theory would try to prove, ultimately unsuccessfully, that mathematics could be derived solely from set theory and was thus only a branch of formal logic (this view of mathematics is usually called logicism), containing none of the synthetic a priori knowledge that Kant had held it to contain.

A second group of philosophers held that scientific knowledge is not derived solely from the senses but is a combination of sensation and ideas, and that scientific hypotheses are not arrived at solely through inductive logic or procedures; the knower contributes something. In this view, "A scientist makes a discovery when he finds that he can, without strain, add an organizing idea to a multitude of sensations."[2] William Whewell can be taken as the nineteenth-century exemplar of this view. This view is sometimes called realism because it holds that a scientific statement or hypothesis expresses something true about a real external world, and not just something about the observer's empirical experience; some realists have gone so far as to hold that it expresses a necessary truth and not just a contingent one. Ludwig Boltzmann, N.R. Campbell, and many of today's post-positivist philosophers who hold to some version of scientific realism can be understood as being in this camp.

Scientific realism is the view that the universe really is as explained by scientific statements. Realists hold that things like electrons and magnetic fields actually exist. It is naïve in the sense of taking scientific models at face value, and it is the view that many scientists adopt.

In contrast to realism, instrumentalism holds that our perceptions, scientific ideas and theories do not necessarily reflect the real world accurately, but are useful instruments to explain, predict and control our experiences. To an instrumentalist, electrons and magnetic fields are convenient ideas that may or may not actually exist. For instrumentalists, the empirical method is used to do no more than show that theories are consistent with observations. Instrumentalism is largely based on John Dewey's philosophy and, more generally, pragmatism, which was influenced by philosophers such as Charles Peirce and William James.

The empiricist/positivist attitude towards this nominalist-realist split was neatly summarized by Rudolf Carnap:

Empiricists are in general rather suspicious with respect to any kind of abstract entities like properties, classes, relations, numbers, propositions, etc. They usually feel much more in sympathy with nominalists than with realists (in the medieval sense). As far as possible they try to avoid any reference to abstract entities and to restrict themselves to what is sometimes called a nominalist language, i.e. one not containing such references.[3]

From the Nineteenth and Into the Twentieth Century: Positivism

Explanations of observations and discoveries that were offered by the scientific revolution were based on the characteristics, powers, and activities of nature itself and (supposedly, anyway) not on any supernatural, transcendent, or occult powers or phenomena. If something was observed in nature but no natural explanation for it was available at the time, then it was assumed that a natural explanation existed and could be found later. In fact, the demarcation between science and non-science, especially between science on the one hand and religion and theology on the other, was assumed to lie in this: it was supposed that scientific knowledge or claims could be justified or proved without reference to anything supernatural or transcendent, whereas religious and theological knowledge or claims necessarily relied on supposed knowledge of what is extra-natural or transcendent. Indeed, the theory of science, as it came to be developed, was primarily concerned to show both that and how science has certainty in its knowledge without reliance on any transcendent, revealed, metaphysical, or occult knowledge.

One of the most widely asserted and held theories of science is known as positivism. This is, William Reese has written, "A family of philosophies characterized by an extremely positive evaluation of science and scientific method. In its earlier versions, the methods of science were held to have the potential not only of reforming philosophy but society as well."[4]

Following his teacher Saint-Simon, French philosopher Auguste Comte (1798-1857) founded what he called the Positivistic Philosophy, which held, as Reese puts it, that "Every science, and every society, must pass through theological and metaphysical states or stages, on the way to the positive, scientific stage, which is their proper goal. In the theological stage explanations are given in terms of the gods. In the metaphysical stage explanations are given in terms of the most general abstractions. In the scientific stage explanations consist of correlating the facts of observation with each other."

Austrian physicist-philosopher Ernst Mach (1838-1916) held that "Explanation consists in calling attention to the sensations or 'neutral impressions' from which the concept was derived and for which it stands. Any concept which does not relate to sensations in this manner is metaphysical, and hence unacceptable." Moreover, "Scientific laws are abridged descriptions of past experience designed to assist us in predicting future experience. Such abridged descriptions are of the pattern of the past phenomena, and hence will be ... characteristic of future instances of the same kind." In addition, the theoretical entities of science "should not be thought of as representing a world behind experience, but rather as instruments or tools helping us formulate the mathematical relations leading to predictions."[4] Indeed, Mach tried to get rid of unobservables altogether. He held that scientific investigations do not go past or beyond observation to any "heart" of nature, and that causality is merely the habit of mind of seeing constant conjunction, as David Hume had held. The goal of scientific investigation, then, is to discover the relations between our sensations.

French philosopher Pierre Duhem (1861-1916) expressed views similar to those of Mach, holding "a physical theory to be a system of mathematical propositions representing a set of experimental laws..." and that "The object of science is to discover the relations holding among appearances."[4] Science uses models, either actual ones or constructed ones, to represent what underlies the observable phenomena.

Mathematics and Science

A major problem for empiricist and positivist accounts of science and knowledge that hoped to eliminate metaphysics was posed by mathematics, for everyone recognized the central role that mathematics plays in science. German philosopher Immanuel Kant (who, interestingly enough, called his own work a "Copernican revolution") had held that mathematics is both synthetic (meaning that its statements tell us something about the world, as opposed to analytic statements, which are true because of logic alone) and a priori (meaning that its statements are known to be true prior to empirical experience). If Kant's claim is true, then things can be known about the world before any observations are made. In addition, such notions as absolute equality or a perfect circle cannot be discovered by experiential observation, because there are no examples of perfect equality or perfect circles in the physical world. This shows that mathematics and geometry must be metaphysical in nature, in that they cannot be reduced to or derived solely from the physical world.

This led to efforts to derive mathematics solely from logic, especially the logic of set theory, and thereby disprove Kant's claim that mathematics is synthetic. The set theory of German mathematician-logician Gottlob Frege (1848-1925) was the pioneering effort here, but British logician-philosopher Bertrand Russell (1872-1970) found a fatal mistake in Frege's work. This led Russell and his collaborator Alfred North Whitehead (1861-1947) to develop their own consistent mathematical logic and set theory, published as Principia Mathematica (3 vols.), and numerous people both before and since have worked on powerful forms of logic and mathematical logic. But the effort to reduce even simple arithmetic to axiomatic logic was shown decisively to be impossible by the work of Austrian mathematician-logician Kurt Gödel (1906-1978). Thus the effort to show that mathematics need not be metaphysical has failed. Even today, some people still try to hold to empiricist, nominalist, or formalist accounts of mathematics, but those efforts too have not been successful. Thus, those who hold to a realist account of mathematics (the view that there is a real world of numbers and mathematical theorems, and that mathematics cannot be reduced to generalizations from experience) have a great deal of evidence on their side.

Verificationism and the Vienna Circle

The reductionistic views of Mach and Duhem were well formulated by the beginning of the twentieth century and were highly influential in the developing philosophy of science. Indeed, the marriage of Mach's positivism with the powerful new set-theoretic logic of Russell and Alfred North Whitehead fed into the rise of logical positivism, also known as logical empiricism, expressed especially in the work of the members of the Vienna Circle. This was a movement centered on the University of Vienna in the 1920s and 1930s, led by Moritz Schlick (1882-1936). The goals of the Vienna Circle and the logical positivists were to develop a positivistic account of science, to unify all the sciences into a single system, to purify philosophy of all metaphysical elements using logic as the means, and thereby also to purify science of anything other than logic and empirical observation. In other words, the logical positivists wished to draw a bright line, or demarcation, between science and logic on the valued and esteemed side and everything else on the other side, especially metaphysics and religion, and to banish everything on the far side of that line as literally meaningless.

English philosopher A. J. Ayer (1910-1989) met with the Vienna Circle and returned to England to write an enormously influential small book entitled Language, Truth and Logic (1936); this book amounted to a gospel tract for logical positivism. In it Ayer propounded what is known as the verification principle of meaning, according to which a statement (and science is expressed in statements) is significant only if it is a statement of logic (i.e. an analytic statement) or it could be verified by experience, meaning that some empirical observation could be used to determine its truth or falsity. Statements that were neither analytic nor empirically verifiable were judged to be nonsensical; such meaningless statements included religious, metaphysical, ethical, and aesthetic statements.

Ayer's book provided a trenchant and arresting summary of logical positivism. It was read and deeply absorbed by many thousands of people in the English-speaking world (not just philosophers, but many working scientists and others), and it had an enormous influence, so that, for a time, many people were converted to positivism, that is, to the view that only analytic or empirical statements are meaningful, and that religious, metaphysical, ethical, and aesthetic statements are meaningless. In fact, some people, including some working scientists, still hold to that view even today.

Problems soon arose, however, about the verification principle itself: What is it and how shall it be expressed? It is not analytic because it cannot be derived from logic. But it is not empirical either, because it cannot be empirically discovered or verified. So what is it? If a strict adherence to the verification principle is maintained, then the verification principle itself is meaningless nonsense. That conclusion won't do, however, because the verification principle is meaningful. So, again, what is it? The correct answer is that it is, in fact, a metaphysical statement. But if it is both meaningful and metaphysical, this means that at least some metaphysical statements are meaningful, and that refutes the verification principle. In other words, investigation of the verification principle serves to refute that principle itself.

Proponents of logical positivism, when faced with this difficulty, resorted to saying that the verification principle should be accepted as a proposal. But then others, such as those who accepted religious, metaphysical, ethical, or aesthetic statements, could simply refuse to accept the positivists' recommendation. The greatest or most thorough proponents of logical positivism, such as Rudolf Carnap, Hans Reichenbach, Otto Neurath, and others, worked assiduously, though without ultimate success, to find some form or statement of the verification principle (or confirmation principle, as they came to call it) that could be maintained.

Defenders of logical positivism also faced the problem that if the verification principle were made strong, it would destroy science itself, and the philosophical defense of science was their major concern. One way of stating this problem is to note that scientific laws themselves go beyond the evidence for them. Take, for example, the scientific law-like statement: "Pure water freezes at 0 degrees Celsius." That is a simple statement about a physical property of water. But what is its evidential status? Have we frozen every sample of water in the universe? Clearly not; we have frozen only a very small sample of all existing water. How do we know that all samples will have the same freezing temperature as the ones we have tested? We assume that all water is the same, but, in principle, we cannot empirically test that assumption. We also assume that samples of water from elsewhere in the universe, besides our planet, will freeze at the same temperature. What empirically verifiable principle allows us to make these assumptions, and why?

In short, our scientific law statements go beyond the evidence we have gathered for them, because they necessarily make claims about untested things. Thus, these statements must be regarded as being, at least to some extent, metaphysical. As John Passmore has written, "Such [scientific] laws are, by the nature of the case, not conclusively verifiable; there is no set of experiences such that having these experiences is equivalent to the scientific law." So, in order to deal with this problem, the verification principle had to be made weak enough to admit this dimension of scientific statements. But if it were weakened in that way, then it would permit at least some other metaphysical statements, such as "Either it is raining or the Absolute is not perfect," which a single observation of rain would suffice to verify, whatever the status of its metaphysical second half. All attempts to find a version of the verification principle (or confirmation principle) that admits all scientific statements but excludes all metaphysical statements have met with failure.
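
The logic behind the "raining or the Absolute" counterexample can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a formalization any positivist proposed: it treats a statement that no observation can settle as having the value `None` and combines statements with the disjunction of Kleene's strong three-valued logic. Under those assumptions, observing rain settles the whole disjunction even though its second half stays unsettled.

```python
from typing import Optional

# Assumption for illustration: True/False = settled by observation,
# None = no possible observation bears on the statement.
Verdict = Optional[bool]


def kleene_or(p: Verdict, q: Verdict) -> Verdict:
    """Disjunction in Kleene's strong three-valued logic."""
    if p is True or q is True:
        return True   # one true disjunct makes the whole statement true
    if p is False and q is False:
        return False  # both disjuncts are false
    return None       # otherwise the result stays unsettled


# Hypothetical verdict for the metaphysical half of the example statement.
absolute_is_not_perfect: Verdict = None

print(kleene_or(True, absolute_is_not_perfect))   # it is raining -> True
print(kleene_or(False, absolute_is_not_perfect))  # it is not raining -> None
```

In this sketch, when it is not raining the result is `None`, so the statement can be verified by observation but never falsified by it.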

A big problem for positivist and verificationist accounts of science arose when philosophers pointed out the theory-dependence of observation. Observation involves perception, and so is a cognitive process. That is, one does not make an observation passively, but is actively involved in distinguishing the thing being observed from surrounding sensory data. Therefore, observations depend on some underlying understanding of the way in which the world functions, and that understanding may influence what is perceived, noticed, or deemed worthy of consideration. The Sapir-Whorf hypothesis provides an early version of this understanding of the impact of cultural artifacts on our perceptions of the world.

Empirical observation is supposedly used to determine the acceptability of some hypothesis within a theory. When someone claims to have made an observation, it is reasonable to ask them to justify their claim. Such a justification must make reference to the theory (operational definitions and hypotheses) in which the observation is embedded. That is, the observation is a component of the theory that also contains the hypothesis it either verifies or falsifies. But this means that the observation cannot serve as a neutral arbiter between competing hypotheses; it could do so only if it were independent of the theory. Still another problem for verificationism arose from the Quine-Duhem thesis, which points out that any theory can be made compatible with any empirical observation by the addition of suitable ad hoc hypotheses. This is analogous to the way in which an infinite number of curves can be drawn through any finite set of data points on a graph. Confirmation holism, developed by Willard Van Orman Quine, states that empirical data are not sufficient to decide between theories: a theory can always be made to fit the available empirical data.
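
The curve-fitting analogy can be made concrete with a small Python sketch. The three data points and the function names below are hypothetical, chosen only for illustration. Adding any multiple of a term that vanishes at every observed x value yields a new curve that fits all the observations exactly but predicts something different for an untested case. Because the multiplier `k` can be any number, there are infinitely many such curves.

```python
# Hypothetical observations: (x, y) pairs lying on the line y = 2x.
observations = [(0, 0), (1, 2), (2, 4)]


def line(x):
    """Hypothesis A: the simplest curve through the data, y = 2x."""
    return 2 * x


def rival(x, k):
    """Hypothesis B(k): y = 2x + k * x * (x - 1) * (x - 2).

    The extra term is zero at x = 0, 1 and 2, so every choice of k
    passes through all three observed points.
    """
    return 2 * x + k * x * (x - 1) * (x - 2)


# Every hypothesis in the family fits the data exactly...
for k in range(4):
    assert all(rival(x, k) == y for x, y in observations)
assert all(line(x) == y for x, y in observations)

# ...yet they disagree about the untested point x = 3.
print(line(3))                          # 6
print([rival(3, k) for k in range(4)])  # [6, 12, 18, 24]
```

Picking the straight line over its rivals requires a criterion that the data themselves do not supply, such as a preference for simplicity.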

That empirical evidence does not decide between alternative theories does not imply that all theories are of equal value. Rather than pretending to use a universally applicable methodological principle, the scientist is making a personal choice when choosing one particular theory over another.

What all this means is that positivism, verificationism, or confirmationism cannot supply a means whereby we can draw a bright or sharp line between genuine or true science on one hand and metaphysics on the other. Science and metaphysics do merge into and encroach on each other, at least so far as any positivist, verificationist or confirmationist account of science can say.

Later in his life, Ayer himself admitted that the logical positivist program that he had so well described and championed was all wrong.

Mounting evidence against it from all these considerations, and more, eventually led to the demise of logical positivism and its program. As John Passmore wrote in the 1960s, "Logical positivism, then, is dead, or as dead as a philosophical movement ever becomes."[5] Among other things, this means that the line between science and metaphysics, and possibly even the line between science and religion, is not as clear or sharp as many people have wanted or supposed it to be.

Popper and Falsificationism

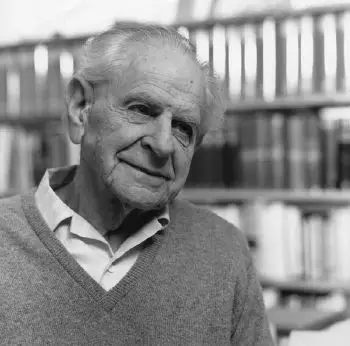

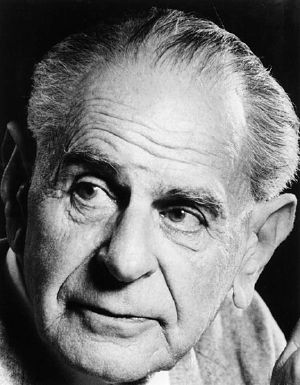

Austrian-English philosopher Karl Popper (1902-1994) responded to the positivists by proposing that both verificationism and inductivism should be replaced with falsificationism. Popper was not a member of the Vienna Circle, but he met with some of its members and discussed his work with them.

Early in his life Popper had become a Marxist, and some time later he became familiar with the work of Sigmund Freud and of psychologist Alfred Adler. But then Albert Einstein came and gave a lecture in Vienna, which Popper attended. He reports that he did not understand what Einstein was saying, but that he did recognize in Einstein "an attitude utterly different from the dogmatic attitude of Marx, Freud, Adler, and even more so that of their followers. Einstein was looking for crucial experiments whose agreement with his predictions would by no means establish his theory, while a disagreement, as he was the first to stress, would show his theory to be untenable." This led Popper to the insight that would, he wrote, guide his subsequent work in philosophy of science: "I arrived, by the end of 1919, at the conclusion that the scientific attitude was the critical attitude, which did not look for verifications but for crucial tests; tests which could refute the theory tested, although they could never establish it."[6]

Positivist theory of science had been based on a theory of verification: the view that positive instances (observations or data), through a process of inductive logic or inductive method, would serve to establish and verify a scientific theory. For example, observations of white swans would serve to positively verify the theory "All swans are white."

Positivism and verificationism were, thus, based on acceptance of inductive logic and inductive methods. But inductive methods and inductive logic had already been severely criticized by Scottish philosopher David Hume (1711-1776), who, in his Treatise of Human Nature, had pointed out that inferences drawn from experiences in the past and present cannot be projected into the future unless one adopts a premise that the future will be like the past.

That premise that the future will be like the past, however, cannot itself be justified deductively, and any attempt to justify it inductively will lead to an infinite regress. Hume's general point was that there is no logical sanction for an inference from observed cases to unobserved ones.

A great deal of philosophers' thought and ink has been devoted to showing that Hume was wrong and that inductive inferences can be logically good ones, but all that effort has ultimately been of little avail. Some have gone so far as to declare that inductive logic is ipso facto valid because it is the necessary pillar of scientific inquiry and discovery, which surely puts the cart before the horse! This "problem of induction" is sometimes called Hume's problem.

Popper was concerned to do two things: to find a demarcation between (good or true) science and pseudoscience, a distinction that had both epistemological and ethical import for him, and to solve Hume's problem. He worked first on the problem of demarcation. His favorite example of genuine science was the work of Einstein; his favorite examples of pseudoscience were Marxism (he had ceased being a Marxist by the beginning of his 20s) and psychoanalysis. He concluded that what separates true or genuine science from pseudoscience is that genuine science makes predictions that can be tested; it is not dogmatic. According to this falsificationist criterion of demarcation, a theory is genuinely scientific if we can specify what would cause us to reject it.

Popper's ultimate discovery, he claimed, was that adopting falsification instead of verification would solve both of those problems: "Only after some time did I realize that there was a close link, and that the problem of induction arose essentially from a mistaken solution to the problem of demarcation, from the belief that what elevated science over pseudoscience was the 'scientific method' of finding true, secure, and justifiable knowledge, and that this method was the method of induction: a belief that erred in more ways than one."

Popper noticed a logical asymmetry between verification and falsification. Attempts at verification rely on the invalid and discredited inductive logic. But falsification relies on the deductive and wholly valid inference of modus tollens.

Modus tollens has the form:

- Premise 1: If P then Q.

- Premise 2: Q is false.

- Therefore, Conclusion: P is false.
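The asymmetry can be checked mechanically. The Python sketch below (an illustration added here, not something from Popper) enumerates every truth-value assignment to P and Q and reports whether any assignment makes all premises true while the conclusion is false. Modus tollens passes. The pattern one would need in order to reason from a successful prediction back to the truth of a theory (If P then Q; Q; therefore P), known as affirming the consequent, fails. The helper names `implies` and `is_valid` are hypothetical.

```python
from itertools import product

def implies(p: bool, q: bool) -> bool:
    """Material conditional: 'if p then q' is false only when p is true and q is false."""
    return (not p) or q

def is_valid(premises, conclusion) -> bool:
    """An argument form is valid if no assignment of truth values
    makes every premise true while the conclusion is false."""
    for p, q in product([True, False], repeat=2):
        if all(premise(p, q) for premise in premises) and not conclusion(p, q):
            return False  # found a counterexample
    return True

# Modus tollens: If P then Q; Q is false; therefore P is false.
modus_tollens = is_valid(
    premises=[lambda p, q: implies(p, q), lambda p, q: not q],
    conclusion=lambda p, q: not p,
)

# Affirming the consequent: If P then Q; Q is true; therefore P is true.
affirming_consequent = is_valid(
    premises=[lambda p, q: implies(p, q), lambda p, q: q],
    conclusion=lambda p, q: p,
)

print("modus tollens valid:", modus_tollens)                    # True
print("affirming the consequent valid:", affirming_consequent)  # False
```

The counterexample for affirming the consequent is P false and Q true: the conditional and the observation both hold, yet the "theory" P is false.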

In using this logical step in the testing of scientific theories, we begin with some purported scientific theory or law or law-like statement, such as, for example, "Silver melts at 961.93 degrees Celsius." From that we derive an observation or data statement; in our example it would be something like: "This sample of silver [that I am about to test] will melt at 961.93 degrees C." Then we perform the test. If it turns out that the silver in our test does not melt at 961.93 degrees C, then the original law-like statement or theory is falsified. If the sample does melt at the predicted temperature, then, tentatively, the law-like statement has passed the test and is not falsified. Popper never said that a test confirms a theory; he did not believe in theory confirmation.
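The silver example can be sketched in Python as a single test step. This is a toy illustration under stated assumptions: the names `LawLikeStatement` and `run_test` are hypothetical, and the 0.5-degree tolerance is an arbitrary stand-in for measurement error. In keeping with Popper's view, the function can report a falsification but never a confirmation.

```python
from dataclasses import dataclass

@dataclass
class LawLikeStatement:
    """A law-like claim, e.g. 'Silver melts at 961.93 degrees Celsius'."""
    substance: str
    predicted_melting_point_c: float

def run_test(law: LawLikeStatement, observed_melting_point_c: float,
             tolerance_c: float = 0.5) -> str:
    """Compare the derived prediction with an observation.

    The tolerance is an illustrative allowance for measurement error.
    Passing only means the law was not falsified by this test; the
    function deliberately has no 'confirmed' outcome.
    """
    if abs(observed_melting_point_c - law.predicted_melting_point_c) > tolerance_c:
        return "falsified"
    return "not falsified (tentatively corroborated)"

silver = LawLikeStatement("silver", 961.93)
print(run_test(silver, 961.9))   # not falsified (tentatively corroborated)
print(run_test(silver, 950.0))   # falsified
```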

Popper described falsifiability using the following observations, paraphrased from his 1963 essay "Science: Conjectures and Refutations":

- It is easy to confirm or verify nearly every theory, if we look for confirmations.

- Confirmations are significant only if they are the result of risky predictions; that is, if, unenlightened by the theory, we should have expected an event which was incompatible with the theory, an event which would have refuted the theory.

- "Good" scientific theories include prohibitions that forbid certain things to happen. The more a theory forbids, the better it is.

- A theory that is not refutable by any conceivable event is non-scientific. Irrefutability is not a virtue of a theory.

- Every genuine test of a theory is an attempt to falsify or refute it. Theories that take greater "risks" are more testable, and more exposed to refutation.

- Confirming or corroborating evidence is only significant when it is the result of a genuine test of the theory; "genuine" in this case means that it comes out of a serious but unsuccessful attempt to falsify the theory.

- Some genuinely testable theories, when found to be false, are still upheld by their advocates, for example by introducing ad hoc some auxiliary assumption, or by reinterpreting the theory ad hoc in such a way that it escapes refutation. Such a procedure is always possible, but it rescues the theory from refutation only at the price of destroying, or at least lowering, its scientific status.

Popper was adamant that passing a test does not verify a scientific theory or law. "I hold," he wrote, "that scientific theories are never fully justifiable or verifiable, but they are nevertheless testable." He held that all our scientific knowledge is tentative and uncertain, and that we test only those things we think need testing. Our knowledge, even the most strongly held scientific "law," is like "piles driven into a swamp," in his apt metaphor, because it is always subject to further testing should we think there is good reason to do so. We do rely, psychologically and tentatively, on those statements or theories that have passed many tests, but this never guarantees that such a theory will not fail in the future. When we step onto an airplane, for example (a new example, not Popper's), we know that this airplane has flown safely in the past, and in that sense it has passed many tests of its airworthiness, but that is never a guarantee that in the present case it will take us to our destination safely.

The process of attempted falsification, Popper held, solves Hume's problem because it involves no induction; instead, science develops through scientists making bold conjectures and then testing them. He also held that theory proposal and theory testing are completely separate. There is no logic of finding and proposing theories; it is, he claimed, a mysterious and extra-logical process. But, he held, the process of testing a theory, once it is proposed, is a fully logical and objective process.

Almost all commentators have criticized naïve or simple falsificationism, the view that a single instance of disagreement or a single failed test will falsify a theory. But Popper himself had written in his first book, Logik der Forschung, published in Vienna in 1934:

We must distinguish between falsifiability and falsification.... We say that a theory is falsified if we have accepted basic statements which contradict it. This condition is necessary, but not sufficient; for we have seen that non-reproducible single occurrences are of no significance to science. Thus a few basic statements contradicting a theory will hardly induce us to reject it as falsified. We shall take it as falsified only if we discover a reproducible effect which refutes the theory. In other words, we only accept the falsification if a low-level empirical hypothesis which describes such an effect is proposed and corroborated.[7]

Popper himself, and others such as Imre Lakatos, later discussed additional problems that show that simple falsificationism cannot succeed. First, it relied on a clear distinction between theoretical and observational terms or language. But various people, including Popper, N. R. Hanson, and others, showed that observation is theory-laden; there is no simple observation apart from a theory. Second, the objections against verificationism had shown that no proposition, including observation statements, could be proved decisively to be true by experiment. So in order to make simple falsification work, we would have to rely on an experiment that decisively proves the theory under investigation false. But experiments cannot prove that either. Third, every test of a scientific theory relies on a ceteris paribus clause (Latin for "all other things being equal," also understood as "within the margin of error" or "we assume for the time being"). So an attempted falsification could, and in the case of a theory thought to be true or good would, be dodged by saying that what had been falsified was not the central theory but some part of the ceteris paribus clause.
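The third problem can be made concrete with a truth table. Suppose, as a simplification, that a test derives the observation O only from the conjunction of the central theory T and the auxiliary assumptions A bundled in the ceteris paribus clause. The Python sketch below (an illustration with hypothetical variable names) lists every assignment consistent with "if T and A, then O" together with a failed prediction. Modus tollens refutes the conjunction in each case, but in one case T itself remains true.

```python
from itertools import product

def implies(p: bool, q: bool) -> bool:
    """Material conditional: false only when p is true and q is false."""
    return (not p) or q

# T: central theory; A: auxiliary assumptions (the ceteris paribus clause);
# O: the predicted observation. The test licenses only "if T and A, then O".
failed_prediction_worlds = [
    (t, a, o)
    for t, a, o in product([True, False], repeat=3)
    if implies(t and a, o) and not o  # premise holds, but the prediction failed
]

# Modus tollens refutes the conjunction "T and A" in every such world...
conjunction_refuted = all(not (t and a) for t, a, _ in failed_prediction_worlds)
# ...but in at least one world T survives and the auxiliaries take the blame.
theory_can_survive = any(t for t, _, _ in failed_prediction_worlds)

print(failed_prediction_worlds)  # [(True, False, False), (False, True, False), (False, False, False)]
print(conjunction_refuted)       # True
print(theory_can_survive)        # True
```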

These observations led to a more subtle theory of falsification, usually called methodological falsificationism, and to a discussion of what were called "metaphysical research programs," or "scientific research programs." These programs contain the theory, plus a number of ceteris paribus clauses; one Popperian called this a theory plus its "protective belt." In this view, falsification is always tentative. If something seems to be falsified, we first look at the "protective belt" and try to modify it. Only as a last resort do we consider the theory itself to be falsified.

One telling objection to this is that methodological falsificationism cannot itself be falsified. There is no decision procedure to tell us when we should hold onto a theory and when we should give up and abandon it; we can always hold onto a seemingly falsified theory by declaring that it is not the theory but some part of the "protective belt" that has to go. Lakatos expressed it as "a game in which one has little hopes of winning [but]... it is still better to play than give up." In addition, there is little evidence from the history of science (Sir John Eccles being a notable counterexample) of any scientific procedure or research program being based on falsification, simple or methodological.

Popper attempted to solve this problem in later writings by proposing a sophisticated version of falsificationism in which a theory T1 is falsified only if three conditions are satisfied: (1) there exists a theory T2 that has excess empirical content over T1, meaning that it predicts novel facts not predicted by T1; (2) T2 explains everything that was explained by T1; and (3) some of the new predictions of T2 have been confirmed by experiment.
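The three conditions can be written as a single predicate. In this Python sketch a theory is modeled, as a deliberate simplification, as a set of predicted facts plus a set of explained facts; the class `Theory`, the function `is_falsified_by`, and the fact labels are hypothetical. Note that T1 counts as falsified only relative to a rival T2, never by an observation alone.

```python
from dataclasses import dataclass, field

@dataclass
class Theory:
    """Toy model of a theory: the facts it predicts and the facts it explains."""
    name: str
    predictions: set[str]
    explained: set[str] = field(default_factory=set)

def is_falsified_by(t1: Theory, t2: Theory, confirmed: set[str]) -> bool:
    """Return True if rival t2 falsifies t1 under the three conditions."""
    novel = t2.predictions - t1.predictions
    excess_content = bool(novel)               # (1) T2 predicts novel facts
    covers_t1 = t1.explained <= t2.explained   # (2) T2 explains all that T1 explained
    novel_confirmed = bool(novel & confirmed)  # (3) some novel prediction confirmed
    return excess_content and covers_t1 and novel_confirmed

t1 = Theory("T1", predictions={"a", "b"}, explained={"a", "b"})
t2 = Theory("T2", predictions={"a", "b", "c"}, explained={"a", "b", "c"})

print(is_falsified_by(t1, t2, confirmed={"c"}))  # True
print(is_falsified_by(t1, t2, confirmed=set()))  # False: novel fact not yet confirmed
```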

Thus in this sophisticated version of falsification a theory itself is not rejected by a falsification. Instead, we compare one theory with another, and we do not reject a theory until a better one comes along. So, Popper could claim, scientific knowledge grows through a process whereby a better theory replaces a previous one. The notion of a crucial falsifying experiment was discarded in favor of tests to decide between two or more competing theories. Moreover, in this view the notion of proliferating theories (numerous alternatives) becomes important. So science does not deal with theories and falsifiers of those theories, but with rival theories.

But this takes us far from the original notion that the demarcation problem could be solved through falsificationism. The original idea had been that there was a simple methodological or logical method (sometimes called a syntactic method, as opposed to a semantic one) of making the demarcation. But that was true only for simple falsification, and we have already seen that simple falsificationism fails and cannot be maintained. Nor can demarcation be made using sophisticated falsificationism, because in the sophisticated falsification model we must make decisions, relying on convention or choice, between what constitutes "real" knowledge and what constitutes "background noise," and between what counts as a genuine falsification of the theory under consideration and what counts as just a nick in the "protective belt." In sophisticated falsificationism we can make the mistake of rejecting a true theory or the mistake of holding on too long and tenaciously to a bad or false theory, and the sophisticated falsificationist criteria themselves give us no sure logical or methodological means of showing just which of those we are doing in any particular instance.

Thus, what is science and what is pseudoscience depends in a central way on convention or decision. If that is so, then there can be unresolvable disagreements about what is genuine science and what is pseudoscience, and there is no fully reliable or methodological way of making this demarcation.

Imre Lakatos (1922-1974), a Hungarian refugee, brilliant mathematician, and philosopher of science, arrived in 1960 at the London School of Economics, where Popper was a professor. In the beginning Lakatos was a close associate of Popper, and Popper praised him highly. But they had a falling out, after which Popper bitterly denounced Lakatos, and Lakatos became one of Popper's most devastating critics.

Popper had noted, and Lakatos agreed, that the probability of any theory based on evidence for it is zero, because there are an infinite number of theories that may account for any set of data. This can be seen by thinking of dots on graph paper (those dots representing individual bits of data) and then asking how many different curves can be drawn passing through all those dots. The answer is an infinite number. Thus the probability that any one of them is the correct one is zero, so inductivism cannot lead us to the right or correct theory, except through a chance that has a zero probability.
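The graph-paper argument can be illustrated in Python. The sketch fits the unique lowest-degree polynomial through three hypothetical data points using Lagrange interpolation, then adds any multiple of a polynomial that equals zero at every observed x value. Every resulting curve fits the data exactly, yet the curves disagree about an unobserved point. Exact `Fraction` arithmetic avoids rounding error. The sketch shows only that the data leave infinitely many candidates open; it does not by itself compute any probability.

```python
from fractions import Fraction

def lagrange(points, x):
    """Evaluate at x the unique lowest-degree polynomial through `points`."""
    total = Fraction(0)
    for i, (xi, yi) in enumerate(points):
        term = Fraction(yi)
        for j, (xj, _) in enumerate(points):
            if j != i:
                term *= Fraction(x - xj, xi - xj)
        total += term
    return total

def rival_curve(points, c):
    """Another curve through the same points: add c times a polynomial
    that equals zero at every observed x value."""
    def curve(x):
        vanishing = Fraction(1)
        for xi, _ in points:
            vanishing *= x - xi
        return lagrange(points, x) + c * vanishing
    return curve

data = [(0, 1), (1, 3), (2, 2)]  # hypothetical observations
curves = [rival_curve(data, c) for c in range(5)]

# Every curve passes exactly through every data point...
assert all(curve(x) == y for curve in curves for x, y in data)
# ...yet the curves disagree about the unobserved point x = 3.
print([str(curve(3)) for curve in curves])  # ['-2', '4', '10', '16', '22']
```

Any value of `c` yields another fit, so five curves here stand in for an unbounded family.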

But, Lakatos noted, sophisticated falsificationism relies on conventionalism, and any convention can be saved through use of ad hoc hypotheses. No methodological rule exists for distinguishing decisively between genuine or necessary auxiliary hypotheses and degenerating or useless ad hoc ones, so conventionalism does not demarcate at all.

Finally, Lakatos turned to a deeper investigation of the ceteris paribus clauses that always accompany any important theory. The ultimate conclusion is that falsificationism, too, fails to provide a demarcation criterion, and also fails to give a general account of science and scientific discovery.

Thomas Kuhn

No book received more attention in post-World War II twentieth-century philosophy, history, and sociology of science than Thomas S. Kuhn's The Structure of Scientific Revolutions.[8] This was the one book that all philosophers, historians, and sociologists of science had to deal with, whether positively or negatively, or simply because it was the most widely discussed.

Kuhn had come not from philosophy or scientific methodology, but from the history of science. Kuhn was also a post-positivist in that he did not accept the positivist or verificationist program, and he did not hold that science is a cumulative process. Instead, he argued that science goes through several kinds of episodes. In the normal course, science is organized around what Kuhn called a paradigm, and this paradigm guides ongoing work in the field at hand. Kuhn called this period "normal science." But gradually, over time, enough anomalies accumulate in that paradigm that it is deemed inadequate, and a revolutionary episode occurs in which the old paradigm is given up and supplanted by a new one; Kuhn calls these episodes "revolutionary science." Moreover, Kuhn uses language much like that used for religious conversions to describe the movement from one paradigm to a new one. Frequently it is younger people who can accept and use the new paradigm, while older ones are still married to the pre-revolutionary paradigm and thus cannot undergo the conversion. Instead, they die off.

Kuhn summarized his thesis this way: "scientific revolutions are ... those non-cumulative developmental episodes in which an older paradigm is replaced in whole or in part by an incompatible new one."[9] Paradigms are "accepted examples of actual scientific practice, examples which include law, theory, application, and instrumentation together, [which] provide models from which spring particular coherent traditions of scientific research."[10]

Kuhn contributed to the breakdown of the view that observation could adjudicate between two competing theories by denying that it is ever possible to isolate the theory being tested from the influence of the theory in which the observations are grounded. He argued that observations always rely on a specific paradigm, and that it is not possible to evaluate competing paradigms independently. More than one such paradigm, or logically consistent construct, can each paint a usable likeness of the world, but it is pointless to pit them against each other, theory against theory. Neither is a standard by which the other can be judged. Instead, the question is which "portrait" is judged by some set of people to promise the most in terms of "puzzle solving."

Several things must be noted about Kuhn's view. First, he does not say that the new paradigm is "closer to the truth" than the old one; in fact, it is difficult to see how he can speak about "the truth" in any ultimate sense, even as a beacon toward which science aims. Second, his view is very much sociologically embedded and determined; it is the scientific community that accepts one paradigm and conducts normal science during that period, but then undergoes revolution and thereby adopts a new paradigm, and, following that, a new episode of normal science. Third, although he may or may not have wanted it this way, Kuhn's account of science is deeply conventional: science is whatever the reigning community holds it to be at any given time. But we must also note that, whatever conclusions they may have drawn about it (and conclusions differed a great deal), all philosophers of science did attend to Kuhn's work at great length, and it has provoked an immense outpouring of literature discussing it, both pro and con, from philosophers, sociologists of science, historians of science, and many others.

A major development in recent decades has been the study of the formation, structure, and evolution of scientific communities by sociologists and anthropologists including Michel Callon, Elihu Gerson, Bruno Latour, John Law, Susan Leigh Star, Anselm Strauss, Lucy Suchman, and others. Some of their work has been loosely gathered under the heading of actor-network theory. Here the approach to the philosophy of science is to study how scientific communities actually operate.

The turn toward consideration of the scientific community has become much more pronounced in recent decades. One area of interest among historians, philosophers, and sociologists of science is the extent to which scientific theories are shaped by their social and political context. This approach is usually known as social constructivism. Social constructivism is in one sense an extension of instrumentalism that incorporates the social aspects of science. In its strongest form, it sees science as merely a discourse between scientists, with objective fact playing a small role if any. A weaker form of the constructivist position might hold that social factors play a large role in the acceptance of new scientific theories.

Demarcation Again

The failure of the demarcation attempts by both the positivist-verificationists and the falsificationists, led by Popper, leaves us with no methodological way of making any sharp demarcation between science and non-science. Both the positivist-verificationists and the falsificationists had attempted to provide a logical or quasi-logical methodology for making such a demarcation. But that attempt has now collapsed, although some people still cling to the view that such a methodology exists, either through some form of verificationism or some form of falsificationism. But philosopher Michael Ruse, among many others, has noted: "It is simply not possible to give a neat definition, specifying necessary and sufficient characteristics, which separates all and only those things that have ever been called 'science'. ... The concept 'science' is not as easily definable as, for example, the concept 'triangle'."

Amherst College philosopher Alexander George has written:

...the intelligibility of that [demarcation] task depends on the possibility of drawing a line between science and non-science. The prospects for this are dim. Twentieth-century philosophy of science is littered with the smoldering remains of attempts to do just that. . . . Science employs the scientific method. No, there's no such method: Doing science is not like baking a cake. Science can be proved on the basis of observable data. No, general theories about the natural world can't be proved at all. Our theories make claims that go beyond the finite amount of data that we've collected. There's no way such extrapolations from the evidence can be proved to be correct. Science can be disproved, or falsified, on the basis of observable data. No, for it's always possible to protect a theory from an apparently confuting observation. Theories are never tested in isolation but only in conjunction with many other extra-theoretical assumptions (about the equipment being used, about ambient conditions, about experimenter error, etc.). It's always possible to lay the blame for the confutation at the door of one of these assumptions, thereby leaving one's theory in the clear. And so forth. . . . Let's abandon this struggle to demarcate and instead let's liberally apply the label 'science' to any collection of assertions about the workings of the natural world. ...what has a claim to being taught in the science classroom isn't all science, but rather the best science, the claims about reality that we have strongest reason to believe are true....

He continues,

Science versus non-science seems like a much sharper dichotomy than better versus worse science. The first holds out the prospect of an 'objective' test, while the second calls for 'subjective' judgment. But there is no such test, and our reliance on judgment is inescapable. We should be less proprietorial about the unhelpful moniker 'science' but insist that only the best science be taught in our schools.[11]

This does not mean that "anything goes," or that just anything can call itself science, despite Paul Feyerabend's claim in his book Against Method. Ruse, for example, went on to claim, "Science is a phenomenon that has developed through the ages, dragging itself apart from religion, philosophy, superstition, and other bodies of human opinion and belief." But that is a sociological and communal demarcation instead of a methodological one, dependent on what the community of scientists deems, at any given time, to be science and what it rules out as pseudoscience or non-science. That is not a fully satisfactory answer to those who want a strong line or demarcation between science and pseudoscience because what we are left with is conventionalistic instead of methodological, and the community may be wrong or biased or otherwise unreliable. But it seems to be the best answer now available.

Others have spoken particularly to this issue. Philosopher Paul Thagard, for example, has proposed a soft or sociological demarcation, as opposed to a methodological one, based on how a particular group of theory proponents act as a community. On this proposal, the group's theory is pseudoscientific if:

- It is less progressive than alternative theories over a long period of time and faces many unsolved problems;

- The community of practitioners makes little attempt to develop the theory towards solutions of these problems;

- The community shows no concern for attempts to evaluate the theory in relation to others;

- The community is selective in considering confirmations and disconfirmations; and

- The community of practitioners does not apply appropriate safeguards against self-deception and known pitfalls of human perception. Those safeguards include peer review, blind or double blind studies, control groups, and other protections against expectation and confirmation bias.
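Read literally, the list is joined by "and," so a group's theory counts as pseudoscientific only when every criterion holds. The Python sketch below encodes that reading; the field names are hypothetical paraphrases of the five criteria, and treating each criterion as a simple yes/no judgment is itself an assumption. Under this conjunctive reading, a community that meets even one safeguard escapes the label, which bears on the objection that follows.

```python
from dataclasses import dataclass, fields

@dataclass
class CommunityProfile:
    """Hypothetical yes/no judgments about a theory and its community."""
    less_progressive_with_unsolved_problems: bool
    little_attempt_to_solve_problems: bool
    no_concern_for_comparative_evaluation: bool
    selective_about_evidence: bool
    lacks_safeguards_against_self_deception: bool

def is_pseudoscientific(profile: CommunityProfile) -> bool:
    # Conjunctive reading: every criterion must hold.
    return all(getattr(profile, f.name) for f in fields(profile))

all_criteria = CommunityProfile(True, True, True, True, True)
uses_peer_review = CommunityProfile(True, True, True, True,
                                    lacks_safeguards_against_self_deception=False)

print(is_pseudoscientific(all_criteria))      # True
print(is_pseudoscientific(uses_peer_review))  # False
```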

But that set of criteria is itself questionable because it may not exclude things that many scientists want to rule out. Whether those criteria would exclude astrology, for example, is doubtful because for each point, astrologers could be found who take pains to satisfy it.

Science, Subjectivity, and Objectivity

Although they may have had different ways of attempting to accomplish it, one of the goals of positivism, verificationism, and falsificationism was to assert and preserve the ideal of the objectivity of science. But, in the face of devastating critiques of each, the collapse of the programs of positivism, verificationism, and falsificationism, along with the rise of sociological and communal accounts of science, seems to close the door to the objectivity of science and open the door to subjectivism. This became especially the case once it was shown that observation is theory laden and is something in which the observer is an active participant, not merely a passive receptor; the observing subject thus influences the outcome of the observation. In fact, some theorists, such as Feyerabend, have directly embraced subjectivism and declared science to be no different in principle from any other human subjective pursuit. That tack, however, has been resisted by others who still want to preserve the ideal of objectivity of science, even though there is no methodological way to guarantee objectivity.

One theorist who took a novel approach to this problem was Michael Polanyi, in his books Personal Knowledge and The Tacit Dimension. Polanyi declared that observation is a gestalt accomplishment, and that we always know more than we can say, so that the tacit dimension of knowing is greater than what we make explicit. Thus the subjective component is essential and central to perception and knowing. But, Polanyi held, we reach objectivity through that subjectivity because of a personal commitment that accompanies any of our declarations that something is true. By that, we make a commitment that what we know and declare to be true is something beyond our mere subjective knowing. When we declare something to be true, we are in effect recommending that others also accept it subjectively. Unfortunately, Polanyi did not get much attention from theorists of science, but his work was influential in some other fields.

Most serious philosophers of science have deplored the retreat into subjectivism that has come about from the collapse mentioned above, and have attempted to find an objectivity to science even though this cannot be guaranteed by any methodological means or rubric. Larry Laudan has expressed his view on the problem in this way:

Feminists, religious apologists (including "creation scientists"), counterculturalists, neo-conservatives, and a host of other curious fellow-travelers have claimed to find crucial grist for their mills in, for instance, the avowed incommensurability and underdetermination of scientific theories. The displacement of the idea that facts and evidence matter by the idea that everything boils down to subjective interests and perspectives is...the most prominent and pernicious manifestation of anti-intellectualism in our time.[12]

He has also declared,

Insofar as our concern is to protect ourselves and our fellows from the cardinal sin of believing what we wish were so rather than what there is substantial evidence for (and surely that is what most forms of 'quackery' come down to), then our focus should be squarely on the empirical and conceptual credentials for claims about the world. The 'scientific' status of those claims is irrelevant.[13]

Reductionism in Science

One of the goals of some theorists has been reductionism. Reductionism in science can have several different senses. One type of reductionism is the belief that all fields of study are ultimately amenable to scientific explanation. Perhaps an historical event might be explained in sociological and psychological terms, which in turn might be described in terms of human physiology, which in turn might be described in terms of chemistry and physics. The historical event will have been reduced to a physical event. This might be seen as implying that the historical event was 'nothing but' the physical event, denying the existence of emergent phenomena.

Daniel Dennett invented the term "greedy reductionism" to describe the assumption that such reductionism was possible. He claims that it is just "bad science," seeking to find explanations that are appealing or eloquent, rather than those that are of use in predicting natural phenomena.

Arguments made against greedy reductionism through reference to emergent phenomena rely upon the fact that self-referential systems can be said to contain more information than can be described through individual analysis of their component parts. Analysis of such systems is necessarily information-destructive because the observer must select a sample of the system that can be at best partially representative. Information theory can be used to calculate the magnitude of information loss and is one of the techniques applied by chaos theory.

Continental Philosophy of Science

Although the members of the Vienna Circle were from Continental Europe, the logical positivists and their program became mostly an Anglo-American phenomenon, especially with the coming of the Nazis and World War II. In the Continental philosophical tradition, science has been viewed more from a world-historical perspective than from a methodological one. One of the first philosophers who supported this view was Georg Wilhelm Friedrich Hegel. Philosophers such as Ernst Mach, Pierre Duhem, and Gaston Bachelard also wrote their works with this world-historical approach to science. Two other approaches to science include Edmund Husserl's phenomenology and Martin Heidegger's hermeneutics. All of these approaches involve a historical and sociological turn to science, with a special emphasis on lived experience (the Husserlian "life-world" or Heideggerian "existential" approach), rather than the progress-based or anti-historical approach taken in the analytic tradition.


Notes

- Daniel Dennett, Darwin's Dangerous Idea (New York: Simon & Schuster, 1996, ISBN 068482471X).

- R. Harré, "History of Philosophy of Science," in Paul Edwards (ed.), Encyclopedia of Philosophy Vol. 6 (Pearson College Division, 1967, ISBN 978-0028949505), 289.

- Rudolf Carnap, "Empiricism, Semantics, and Ontology," Revue Internationale de Philosophie 4 (1950): 20-40. Retrieved November 22, 2021.

- William L. Reese, Dictionary of Philosophy and Religion: Eastern and Western Thought (Atlantic Highlands, NJ: Humanities Press International, 1996, ISBN 0391038648), 596.

- "Logical Positivism" in Paul Edwards (ed.), Encyclopedia of Philosophy Vol. 5 (Pearson College Division, 1967, ISBN 978-0028949505), 56.

- Karl R. Popper, Unended Quest: An Intellectual Autobiography (La Salle, IL: Open Court Publishing Company, 1982, ISBN 0875483437).

- Translated and published in English in 1959 as The Logic of Scientific Discovery (London: Routledge, 2002, ISBN 0415278449).

- â Thomas S. Kuhn,The Structure of Scientific Revolutions (Chicago: University of Chicago Press, 1996, ISBN 0226458083).

- â Kuhn 1962, 91.

- â Kuhn 1962, 10.

- â Alexander George, What's wrong with intelligent design and with its critics Christian Science Monitor, December 22, 2005. Retrieved November 22, 2021

- Larry Laudan, Science and Relativism: Some Key Controversies in the Philosophy of Science (Chicago: University of Chicago Press, 1990, ISBN 0226469492).

- Larry Laudan, "The Demise of the Demarcation Problem," in Michael Ruse (ed.), But is it Science?: The Philosophical Question in the Creation/Evolution Controversy (Amherst, NY: Prometheus Books, 1996, ISBN 1573920878).

References

- Boyd, R., Paul Gasper, and J. D. Trout (eds.). The Philosophy of Science. Cambridge, MA: MIT Press, 1991. ISBN 0262521563

- Dennett, Daniel. Darwin's Dangerous Idea. New York: Simon & Schuster, 1996. ISBN 068482471X

- Edwards, Paul (ed.). Encyclopedia of Philosophy Vol. 6. Pearson College Division, 1967. ISBN 978-0028949505

- Glazebrook, Trish. Heidegger's Philosophy of Science. Bronx, NY: Fordham University Press, 2000. ISBN 0823220389

- Gutting, Gary. Continental Philosophy of Science. Blackwell Publishers, 2004. ISBN 0631236090

- Harré, R. The Philosophies of Science: An Introductory Survey. London: Oxford University Press, 1972.

- Kearney, R. Routledge History of Philosophy. London: Routledge, 1994. ISBN 0415056292

- Klemke, E., et al. (eds.). Introductory Readings in The Philosophy of Science. Amherst, NY: Prometheus Books, 1998.

- Kuhn, Thomas S. The Structure of Scientific Revolutions. Third edition, 1996. Chicago: University of Chicago Press, 1962. ISBN 0226458083

- Kuipers, T. A. F. Structures in Science. An Advanced Textbook in Neo-Classical Philosophy of Science. New York: Synthese Library, Springer, 2001. ISBN 0792371178

- Ladyman, J. Understanding Philosophy of Science. London: Routledge, 2002. ISBN 0415221579

- Lakatos, Imre, and Alan Musgrave (eds.). Criticism and the Growth of Knowledge. Cambridge: Cambridge University Press, 1970. ISBN 0521096235

- Laudan, Larry. Science and Relativism: Some Key Controversies in the Philosophy of Science. Chicago: University of Chicago Press, 1990. ISBN 0226469492

- Losee, John. A Historical Introduction to The Philosophy of Science. Fourth edition, 2001. New York: Oxford University Press, 1972. ISBN 0198700555

- Newton-Smith, W. H. (ed.). A Companion To The Philosophy of Science. Blackwell Companions to Philosophy. Oxford: Blackwell Publishers, 2001. ISBN 0631230203

- Niiniluoto, Ilkka. Critical Scientific Realism. New York: Oxford University Press, 2002. ISBN 0199251614

- Pap, Arthur. An Introduction to the Philosophy of Science. Glencoe, IL: The Free Press, 1962.

- Papineau, David (ed.). The Philosophy of Science. Oxford Readings in Philosophy. New York: Oxford University Press, 1996. ISBN 0198751656

- Popper, Karl R. Unended Quest: An Intellectual Autobiography. La Salle, IL: Open Court Publishing Company, 1982. ISBN 0875483437

- Popper, Karl R. The Logic of Scientific Discovery. London: Routledge, 2002. ISBN 0415278449

- Reese, William L. Dictionary of Philosophy and Religion: Eastern and Western Thought. Atlantic Highlands, NJ: Humanities Press International, 1996. ISBN 0391038648

- Rorty, Richard (ed.). The Linguistic Turn: Essays in Philosophical Method. Reprint edition, 1992. Chicago: University of Chicago Press, 1967. ISBN 0226725693

- Rosenberg, Alex. Philosophy of Science: A Contemporary Introduction. Second edition, 2005. London: Routledge, 2000. ISBN 0415343178

- Ruse, Michael (ed.). But is it Science?: The Philosophical Question in the Creation/Evolution Controversy. Amherst, NY: Prometheus Books, 1996. ISBN 1573920878

- Salmon, M. H., et al. Introduction to the Philosophy of Science: A Text By Members of the Department of the History and Philosophy of Science of the University of Pittsburgh. Indianapolis, IN: Hackett Publishing Company, 1999. ISBN 0872204502

- Snyder, Paul. Toward One Science: The Convergence of Traditions. Palgrave MacMillan, 1977. ISBN 0312810121

- Suppe, Frederick (ed.). The Structure of Scientific Theories. Second edition, 1979. Urbana, IL: University of Illinois Press, 1977. ISBN 0252006348

- Van Fraassen, Bas C. The Scientific Image. Oxford: Clarendon Press, 1980. ISBN 0198244274

External links

All links retrieved November 23, 2022.

- PhilSci Archive.

- Science and Knowledge: One view on the philosophy of science.

General Philosophy Sources

- Stanford Encyclopedia of Philosophy

- Paideia Project Online

- The Internet Encyclopedia of Philosophy

- Project Gutenberg

Credits

New World Encyclopedia writers and editors rewrote and completed the Wikipedia article in accordance with New World Encyclopedia standards. This article abides by terms of the Creative Commons CC-by-sa 3.0 License (CC-by-sa), which may be used and disseminated with proper attribution. Credit is due under the terms of this license that can reference both the New World Encyclopedia contributors and the selfless volunteer contributors of the Wikimedia Foundation.


Note: Some restrictions may apply to use of individual images which are separately licensed.