Perception

In contemporary psychology, perception is defined as the brain’s interpretation of sensory information so as to give it meaning. The cognitive sciences give a more detailed account: perception is the process of acquiring, interpreting, selecting, and organizing sensory information. Many cognitive psychologists hold that, as we move about in the world, we create a model of how the world works. That is, we sense the objective world, but our sensations map to percepts, and these percepts are provisional, in the same sense that scientific methods and scientific hypotheses can be provisional.

Organization of Perception

Our perception of the external world begins with the senses, which lead us to generate empirical concepts representing the world around us, within a mental framework relating new concepts to preexisting ones. Perception takes place in the brain. Using sensory information as raw material, the brain creates perceptual experiences that go beyond what is sensed directly. Familiar objects tend to be seen as having a constant shape, even though the retinal images they cast change as they are viewed from different angles. This is because our perceptions have the quality of constancy, which refers to the tendency to sense and perceive objects as relatively stable and unchanging despite changing sensory stimulation and information.

Once we have formed a stable perception of an object, we can recognize it from almost any position, at almost any distance, and under almost any illumination. A white house looks like a white house by day or by night and from any angle. We see it as the same house. The sensory information may change as illumination and perspective change, but the object is perceived as constant.

The perception of an object as the same size regardless of the distance from which it is viewed is called size constancy. Shape constancy is the tendency to see an object as the same shape no matter what angle it is viewed from. Color constancy is the inclination to perceive familiar objects as retaining their color despite changes in sensory information. Likewise, brightness constancy is the perception of brightness as the same, even though the amount of light reaching the retina changes. Size, shape, brightness, and color constancies help us to better understand and relate to the world. Psychophysical evidence indicates that without this ability, we would find the world very confusing.

Psychologists and other cognitive scholars usually categorize perception as internal and external. Internal perception (proprioception) tells us what's going on in our bodies. We can sense where our limbs are, whether we're sitting or standing; we can also sense whether we are hungry, or tired, and so forth. External perception or sensory perception, i.e., exteroception, tells us about the world outside our bodies. Using our senses of sight, hearing, touch, smell, and taste, we discover colors, sounds, textures, etc. of the world at large. There is a growing body of knowledge of the mechanics of sensory processes in cognitive psychology. The philosophy of perception is mainly concerned with exteroception. When philosophers use the word perception they usually mean exteroception, and the word is used in that sense everywhere.

Visual perception

In psychology, visual perception is the ability to interpret visible light information reaching the eyes which is then made available for planning and action. The resulting perception is also known as eyesight, sight or vision. The various components involved in vision are known as the visual system.

of colors, perception of darkness, the psychological explanation of the moon illusion, and binocular vision.[2]

Hermann von Helmholtz is often credited with the first study of visual perception in modern times. Helmholtz held vision to be a form of unconscious inference: vision is a matter of deriving a probable interpretation for incomplete data.

Inference requires prior assumptions about the world: two well-known assumptions that we make in processing visual information are that light comes from above, and that objects are viewed from above and not below. The study of visual illusions (cases when the inference process goes wrong) has yielded much insight into what sort of assumptions the visual system makes.

The unconscious inference hypothesis has recently been revived in so-called Bayesian studies of visual perception. Proponents of this approach consider that the visual system performs some form of Bayesian inference to derive a perception from sensory data. Models based on this idea have been used to describe various visual subsystems, such as the perception of motion or the perception of depth.[3][4]
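As a minimal sketch of what such a Bayesian model might look like, assume (purely for illustration, this is not taken from any of the cited studies) that both the prior expectation and the noisy sensory measurement are Gaussian. The percept is then a reliability-weighted compromise between the two. The function name and the numbers below are hypothetical.

```python
def gaussian_posterior(prior_mean, prior_var, obs, obs_var):
    """Combine a Gaussian prior with a Gaussian measurement (conjugate update).

    Returns the mean and variance of the posterior, i.e. the "percept"
    and its uncertainty under this simplified model.
    """
    if prior_var <= 0 or obs_var <= 0:
        raise ValueError("variances must be positive")
    # The weight on the measurement grows as the measurement becomes
    # more reliable relative to the prior.
    weight = prior_var / (prior_var + obs_var)
    post_mean = prior_mean + weight * (obs - prior_mean)
    post_var = prior_var * obs_var / (prior_var + obs_var)
    return post_mean, post_var


# Hypothetical example: a prior centred on 0 and a measurement of 10
# (arbitrary units), first with a reliable and then an unreliable sensor.
reliable = gaussian_posterior(0.0, 4.0, 10.0, 1.0)
unreliable = gaussian_posterior(0.0, 4.0, 10.0, 16.0)
print(reliable, unreliable)
```

With a reliable measurement the estimate stays close to the data; with an unreliable one it is pulled towards the prior, which is one way to express "deriving a probable interpretation for incomplete data."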

Gestalt psychologists working primarily in the 1930s and 1940s raised many of the research questions that are studied by vision scientists today. The Gestalt Laws of Organization have guided the study of how people perceive visual components as organized patterns or wholes, instead of many different parts. Gestalt is a German word that translates to "configuration or pattern." According to this theory, there are six main factors that determine how we group things according to visual perception: Proximity, Similarity, Closure, Symmetry, Common fate and Continuity.

The major problem with the Gestalt laws (and the Gestalt school generally) is that they are descriptive not explanatory. For example, one cannot explain how humans see continuous contours by simply stating that the brain "prefers good continuity." Computational models of vision have had more success in explaining visual phenomena[5] and have largely superseded Gestalt theory.

Visual Illusions

At times our perceptual processes trick us into believing that an object is moving when, in fact, it is not. There is a difference, then, between real movement and apparent movement. Examples of apparent movement are the autokinetic illusion, stroboscopic motion, and the phi phenomenon. The autokinetic illusion is the perception that a stationary object is actually moving. Apparent movement that results from flashing a series of still pictures in rapid succession, as in a motion picture, is called stroboscopic motion. The phi phenomenon is the apparent movement caused by flashing lights in sequence, as on theater marquees. Visual illusions occur when we use a variety of sensory cues to create perceptual experiences that do not actually exist. Some are physical illusions, such as the bent appearance of a stick in water. Others are perceptual illusions, which occur because a stimulus contains misleading cues that lead to inaccurate perceptions. Most of stage magic is based on the principles of physical and perceptual illusion.

Color perception

Color vision is the capacity of an organism or machine to distinguish objects based on the wavelengths (or frequencies) of the light they reflect or emit. The nervous system derives color by comparing the responses to light from the several types of cone photoreceptors in the eye. These cone photoreceptors are sensitive to different portions of the visible spectrum. For humans, the visible spectrum ranges approximately from 380 to 750 nm, and there are normally three types of cones. The visible range and number of cone types differ between species.

A 'red' apple does not emit red light. Rather, it simply absorbs all the frequencies of visible light shining on it except for a group of frequencies that is perceived as red, which are reflected. An apple is perceived to be red only because the human eye can distinguish between different wavelengths. Three things are needed to see color: a light source, a detector (e.g. the eye) and a sample to view.

The advantage of color, which is a quality constructed by the visual brain and not a property of objects as such, is the better discrimination of surfaces allowed by this aspect of visual processing.

In order for animals to respond accurately to their environments, their visual systems need to correctly interpret the form of objects around them. A major component of this is perception of colors.

Perception of color is achieved in mammals through color receptors containing pigments with different spectral sensitivities. In most primates closely related to humans there are three types of color receptors (known as cone cells). This confers trichromatic color vision, so these primates, like humans, are known as trichromats. Many other primates and other mammals are dichromats, and many mammals have little or no color vision.

In the human eye, the cones are maximally receptive to short, medium, and long wavelengths of light and are therefore usually called S-, M-, and L-cones. L-cones are often referred to as the red receptor, but while the perception of red depends on this receptor, microspectrophotometry has shown that its peak sensitivity is in the greenish-yellow region of the spectrum.

The peak response of human color receptors varies, even amongst individuals with 'normal' color vision;[6] in non-human species this polymorphic variation is even greater, and it may well be adaptive.[7]

Chromatic adaptation

An object may be viewed under various conditions. For example, it may be illuminated by sunlight, the light of a fire, or a harsh electric light. In all of these situations, human vision perceives that the object has the same color: an apple always appears red, whether viewed at night or during the day. On the other hand, a camera with no adjustment for light may register the apple as having many different shades. This feature of the visual system is called chromatic adaptation, or color constancy; when the correction occurs in a camera it is referred to as white balance.
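The camera-style correction can be sketched with a simple per-channel scaling, often described as a von Kries-style or diagonal adaptation. This is a deliberately simplified illustration rather than a model of the human visual system: it assumes the illuminant's color is known, works directly on RGB values, and uses made-up reflectance and illuminant numbers.

```python
def observed_color(reflectance, illuminant):
    """Light reaching the sensor: surface reflectance times illuminant, per channel."""
    return tuple(r * i for r, i in zip(reflectance, illuminant))


def white_balance(pixel, illuminant_white):
    """Divide each channel by the illuminant so that a white surface maps to (1, 1, 1)."""
    if any(channel <= 0 for channel in illuminant_white):
        raise ValueError("illuminant channels must be positive")
    return tuple(p / w for p, w in zip(pixel, illuminant_white))


# Hypothetical values: a reddish apple seen under neutral daylight and
# under reddish firelight (RGB, arbitrary units).
apple = (0.8, 0.1, 0.1)
daylight = (1.0, 1.0, 1.0)
firelight = (1.0, 0.6, 0.3)

raw_day = observed_color(apple, daylight)
raw_fire = observed_color(apple, firelight)      # differs from raw_day
balanced_fire = white_balance(raw_fire, firelight)
print(raw_day, raw_fire, balanced_fire)
```

The uncorrected values differ between the two light sources, while the balanced value under firelight returns to the apple's own reflectance, which is the sense in which the apple "keeps" its color.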

Chromatic adaptation is one aspect of vision that may fool someone into observing a color-based optical illusion. Though the human visual system generally does maintain constant perceived color under different lighting, there are situations where the brightness of a stimulus will appear reversed relative to its "background" when viewed at night. For example, the bright yellow petals of flowers will appear dark compared to the green leaves in very dim light. The opposite is true during the day. This is known as the Purkinje effect, and arises because in very low light, human vision is approximately monochromatic and most sensitive to wavelengths near 507 nm (blue-green).

Perception of Distance and Depth

We are constantly judging the distance between ourselves and other objects, and we use many cues to determine the distance and the depth of objects. Some of these cues depend on visual messages that one eye alone can transmit: these are called monocular cues. Others, known as binocular cues, require the use of both eyes. Having two eyes allows us to make more accurate judgments about distance and depth, particularly when the objects are relatively close. Depth perception is the visual ability to perceive the world in three dimensions. It is a trait common to many higher animals. Depth perception allows the beholder to accurately gauge the distance to an object.

Depth perception combines several types of depth cues grouped into two main categories: monocular cues (cues available from the input of just one eye) and binocular cues (cues that require input from both eyes).

Monocular cues

- Motion parallax - When an observer moves, the apparent relative motion of several stationary objects against a background gives hints about their relative distance. This effect can be seen clearly when driving in a car: nearby things pass quickly, while far off objects appear stationary. Some animals that lack binocular vision due to wide placement of the eyes employ parallax more explicitly than humans for depth cueing (e.g. some types of birds, which bob their heads to achieve motion parallax, and squirrels, which move in lines orthogonal to an object of interest to do the same).

- Depth from motion - A form of depth from motion, kinetic depth perception, is determined by dynamically changing object size. As objects in motion become smaller, they appear to recede into the distance or move farther away; objects in motion that appear to be getting larger seem to be coming closer. Kinetic depth perception enables the brain to estimate the time to collision (TTC) at a particular velocity. When driving, we constantly judge the dynamically changing headway (TTC) by kinetic depth perception.

- Color vision - Correct interpretation of color, and especially lighting cues, allows the beholder to determine the shape of objects, and thus their arrangement in space. The color of distant objects is also shifted towards the blue end of the spectrum. (e.g. distant mountains.) Painters, notably Cezanne, employ "warm" pigments (red, yellow and orange) to bring features forward towards the viewer, and "cool" ones (blue, violet, and blue-green) to indicate the part of a form that curves away from the picture plane.

- Perspective - The property of parallel lines converging at infinity allows us to reconstruct the relative distance of two parts of an object, or of landscape features.

- Relative size - An automobile that is close to us looks larger than one that is far away; our visual system exploits the relative size of similar (or familiar) objects to judge distance.

- Distance fog - Due to light scattering by the atmosphere, objects that are a great distance away look hazier. In painting, this is called "atmospheric perspective." The foreground is sharply defined; the background is relatively blurred.

- Depth from Focus - The lens of the eye can change its shape to bring objects at different distances into focus. Knowing at what distance the lens is focused when viewing an object means knowing the approximate distance to that object.

- Occlusion - Occlusion (the blocking from sight) of objects by others is also a cue that provides information about relative distance. However, this information only allows the observer to create a "ranking" of relative nearness.

- Peripheral vision - At the outer extremes of the visual field, parallel lines become curved, as in a photo taken through a fish-eye lens. This effect, although it's usually eliminated from both art and photos by the cropping or framing of a picture, greatly enhances the viewer's sense of being positioned within a real, three-dimensional space. (Classical perspective has no use for this so-called "distortion," although in fact the "distortions" strictly obey optical laws and provide perfectly valid visual information, just as classical perspective does for the part of the field of vision that falls within its frame.)

- Texture gradient - Suppose you are standing on a gravel road. The gravel near you can be clearly seen in terms of shape, size and colour. As your vision shifts towards the distant road the texture cannot be clearly differentiated.
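The depth-from-motion cue in the list above, where an object's growing image signals its approach, can be sketched numerically. The example below estimates time to collision from the rate at which an object's angular size expands, using the small-angle relation TTC ≈ θ / (dθ/dt). This is an illustrative sketch, not a claim about how the brain computes it; the function names, the car width, and the speeds are assumptions made up for the example.

```python
import math


def angular_size(width, distance):
    """Visual angle (radians) subtended by an object of the given width."""
    return 2.0 * math.atan(width / (2.0 * distance))


def time_to_collision(theta_prev, theta_now, dt):
    """Estimate time to collision from two successive angular sizes.

    Uses the small-angle approximation TTC ~ theta / (d theta / dt).
    Returns infinity if the image is not expanding.
    """
    rate = (theta_now - theta_prev) / dt
    if rate <= 0:
        return math.inf
    return theta_now / rate


# Hypothetical scenario: a 1.8 m wide car, 50 m ahead, closing at 20 m/s,
# sampled 10 ms apart.
width, speed, dt = 1.8, 20.0, 0.01
d_prev = 50.0
d_now = d_prev - speed * dt
estimate = time_to_collision(angular_size(width, d_prev),
                             angular_size(width, d_now), dt)
print(estimate, d_now / speed)  # estimate vs. true time to collision
```

Note that the estimate needs neither the object's true size nor its distance, only the image size and its rate of change.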

Binocular and oculomotor cues

- Stereopsis/Retinal disparity - Animals that have their eyes placed frontally can also use information derived from the different projection of objects onto each retina to judge depth. By using two images of the same scene obtained from slightly different angles, it is possible to triangulate the distance to an object with a high degree of accuracy. If an object is far away, the disparity of that image falling on both retinas will be small. If the object is near, the disparity will be large. It is stereopsis that gives people the impression of depth when viewing Magic Eye images, autostereograms, 3D movies and stereoscopic photos.

- Accommodation - This is an oculomotor cue for depth perception. When we try to focus on far away objects, the ciliary muscles relax and the eye lens is stretched, making it thinner. The kinesthetic sensations of the contracting and relaxing ciliary muscles (intraocular muscles) are sent to the visual cortex, where they are used for interpreting distance/depth.

- Convergence - This is also an oculomotor cue for distance/depth perception. By virtue of stereopsis the two eyeballs focus on the same object. In doing so they converge. The convergence will stretch the extraocular muscles. Kinesthetic sensations from these extraocular muscles also help in depth/distance perception. The angle of convergence is smaller when the eyes are fixating on far away objects.

Of these various cues, only convergence, focus and familiar size provide absolute distance information. All other cues are relative (i.e., they can only be used to tell which objects are closer than others). Stereopsis is merely relative because a greater or lesser disparity for nearby objects could either mean that those objects differ more or less substantially in relative depth or that the foveated object is nearer or further away (the further away a scene is, the smaller is the retinal disparity indicating the same depth difference).
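The geometry behind these statements can be sketched with a little trigonometry. The example assumes an idealized observer fixating a point straight ahead, with an eye separation of 0.065 m (a typical adult value, used here only as an assumption); the function names are hypothetical.

```python
import math

EYE_SEPARATION = 0.065  # metres; assumed typical value, not from the text


def vergence_angle(distance, eye_separation=EYE_SEPARATION):
    """Angle (radians) between the two lines of sight when fixating at `distance`."""
    return 2.0 * math.atan(eye_separation / (2.0 * distance))


def distance_from_vergence(angle, eye_separation=EYE_SEPARATION):
    """Invert vergence_angle: absolute distance recovered from the convergence angle."""
    return eye_separation / (2.0 * math.tan(angle / 2.0))


def relative_disparity(near, far, eye_separation=EYE_SEPARATION):
    """Difference in vergence angle between two points at distances `near` and `far`."""
    return vergence_angle(near, eye_separation) - vergence_angle(far, eye_separation)


# The same 10 cm depth difference, viewed from 0.5 m and from 5 m.
close_up = relative_disparity(0.5, 0.6)
far_off = relative_disparity(5.0, 5.1)
print(close_up, far_off)
```

Convergence maps one-to-one onto distance, which is why it can serve as an absolute cue, whereas the same depth difference yields a much smaller disparity at the greater viewing distance, so disparity alone is ambiguous without knowing how far away the scene is.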

Binocular cues can be directly perceived far more easily and eloquently than they can be described in words. Try looking around at the room you're in with just one eye open. Then look with just the other eye; the difference you notice will probably be negligible. After that, open both eyes, and see what happens.

Depth perception in art

As art students learn, there are several ways in which perspective can help in estimating distance and depth. In linear perspective, two parallel lines that extend into the distance seem to come together at some point on the horizon. In aerial perspective, distant objects have a hazy appearance and a somewhat blurred outline. The elevation of an object also serves as a perspective cue to depth.

Trained artists are keenly aware of the various methods for indicating spatial depth (color shading, distance fog, perspective and relative size), and take advantage of them to make their works appear "real." The viewer feels it would be possible to reach in and grab the nose of a Rembrandt portrait or an apple in a Cezanne still life, or step inside a landscape and walk around among its trees and rocks.

Photographs capturing perspective are two-dimensional images that often illustrate the illusion of depth. (This differs from a painting, which may use the physical matter of the paint to create a real presence of convex forms and spatial depth.) Stereoscopes and Viewmasters, as well as three-dimensional movies, employ binocular vision by forcing the viewer to see two images created from slightly different positions (points of view). By contrast, a telephoto lens (used in televised sports, for example, to zero in on members of a stadium audience) has the opposite effect. The viewer sees the size and detail of the scene as if it were close enough to touch, but the camera's perspective is still derived from its actual position a hundred meters away, so background faces and objects appear about the same size as those in the foreground.

Stereopsis is depth perception from binocular vision through exploitation of parallax. Depth perception does indeed rely primarily on binocular vision, but it also uses many monocular cues to form the final integrated perception. Besides the binocular cues, there are monocular cues that would be significant to a "one-eyed" person, and more complex inferred cues that require both eyes to be perceiving stereo while the monocular cues are noted. This third group relies on processing within the brain of the person, as they see a full field of view with both eyes. Several medical disorders are known to affect depth perception. Ocular conditions such as amblyopia, optic nerve hypoplasia, and strabismus may reduce the perception of depth.

At the end of World War II, Merleau-Ponty, a French philosopher, published Phenomenology of Perception. This book greatly influenced those visual artists (e.g., the American Minimalist group) who were experimenting with the use of space and whose aim was to create simple but highly conceptual works that would confront the audience and make them aware of their presence in the exhibition’s space. In his book, Merleau-Ponty wrote: "All my knowledge of the world.... is gained from my own particular point of view, or from some experience of the world without which the symbols of science would be meaningless". And also: "I am the absolute source, my existence does not stem from my antecedents, from my physical and social environment; instead it moves out towards them and sustains them, for I alone bring into being for myself.....the horizon whose distance from me would be abolished ....if I were not there to scan it with my gaze".

Many artists applied such theories to their own aesthetics and started to focus on the importance of the human point of view (and its mutability) for a wider understanding of reality. They began to conceive of the audience, and the exhibition’s space, as fundamental parts of the artwork, creating a kind of mutual communication between themselves and the viewer, who therefore ceased to be a mere addressee of their message. As a result, the cell, which is originally meant to be self-contained and isolated, becomes a place of interaction. This interaction never becomes total (since the viewer is forced to remain external to it), but it asks for a complex process of self-consciousness aimed at a broader comprehension of one’s own experience of suffering from the impossibility of fully looking at and fully perceiving oneself.

Perception of Movement

The perception of movement is a complicated process involving both visual information from the retina and messages from the muscles around the eyes as they follow an object. Movement refers to the physical displacement of an object from one position to another. The perception of movement depends in part on the movement of images across the retina of the eye. If you stand still and move your head to look around you, the images of all the objects in the room will pass across your retina. Yet you will perceive all the objects as stationary. Even if you hold your head still and move only your eyes, the images will continue to pass across your retina. But the messages from the eye muscles seem to counteract those from the retina, so the objects in the room will be perceived as motionless.

Motion perception is the process of inferring the speed and direction of objects and surfaces that move in a visual scene given some visual input. Although this process appears straightforward to most observers, it has proven to be a difficult problem from a computational perspective, and extraordinarily difficult to explain in terms of neural processing. Motion perception is studied by many disciplines, including psychology, neuroscience, neurophysiology, and computer science.

First-order motion perception refers to the perception of the motion of an object that differs in luminance from its background, such as a black bug crawling across a white page. This sort of motion can be detected by a relatively simple motion sensor designed to detect a change in luminance at one point on the retina and correlate it with a change in luminance at a neighbouring point on the retina after a delay. Sensors that work this way have been referred to as Reichardt detectors (after the scientist W. Reichardt, who first modelled them),[8] motion-energy sensors,[9] or Elaborated Reichardt Detectors.[10] These sensors detect motion by spatio-temporal correlation and are plausible models for how the visual system may detect motion, although the exact nature of this process is still debated. First-order motion sensors suffer from the aperture problem, which means that they can detect motion only perpendicular to the orientation of the contour that is moving. Further processing is required to disambiguate true global motion direction.
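The delay-and-correlate idea can be sketched in a few lines. The version below is a bare-bones illustration, not a faithful reproduction of any of the cited models: it assumes two discrete luminance signals sampled at neighbouring points, a fixed integer delay in samples, and no spatial or temporal filtering. The function name and signals are hypothetical.

```python
def reichardt_response(left, right, delay):
    """Opponent delay-and-correlate output for two neighbouring luminance signals.

    Positive values indicate motion from the `left` point towards the
    `right` point; negative values indicate the opposite direction.
    """
    if len(left) != len(right):
        raise ValueError("signals must have the same length")
    if delay < 1 or delay >= len(left):
        raise ValueError("delay must be between 1 and len(signal) - 1")
    total = 0.0
    for t in range(delay, len(left)):
        # Rightward half-detector: delayed left input times current right input.
        rightward = left[t - delay] * right[t]
        # Leftward half-detector: the mirror-image pairing.
        leftward = right[t - delay] * left[t]
        total += rightward - leftward
    return total


# A bright spot passes the left point at t=1 and the right point at t=3.
left_signal = [0, 1, 0, 0, 0, 0]
right_signal = [0, 0, 0, 1, 0, 0]
print(reichardt_response(left_signal, right_signal, delay=2))   # 1.0
print(reichardt_response(right_signal, left_signal, delay=2))   # -1.0
```

Subtracting the two mirror-image half-detectors makes the output direction-selective: identical signals at both points (for example uniform flicker) cancel to zero.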

In second-order motion, the moving contour is defined by contrast, texture, flicker or some other quality that does not result in an increase in luminance or motion energy in the Fourier spectrum of the stimulus.[11][12] There is much evidence to suggest that early processing of first- and second-order motion is carried out by separate pathways.[13] Second-order mechanisms have poorer temporal resolution and are low-pass in terms of the range of spatial frequencies that they respond to. Second-order motion produces a weaker motion aftereffect unless tested with dynamically flickering stimuli.[14] First- and second-order signals appear to be fully combined at the level of Area MT/V5 of the visual system.

Having extracted motion signals (first- or second-order) from the retinal image, the visual system must integrate those individual local motion signals at various parts of the visual field into a 2-dimensional or global representation of moving objects and surfaces.
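One way to picture this integration step is the so-called intersection-of-constraints construction: each local sensor reports only the speed along the normal of its contour, and a single 2-D velocity is sought that agrees with all of those reports. The sketch below solves this by least squares. It is offered as an illustration of the computational problem, under the assumption that all measurements belong to one rigidly moving object; it is not a claim about the mechanism the visual system actually uses, and the function name is hypothetical.

```python
import math


def global_velocity(normal_angles_deg, normal_speeds):
    """Least-squares 2-D velocity from speeds measured along contour normals.

    Each measurement i constrains the velocity v by n_i . v = s_i, where
    n_i is the unit normal at angle normal_angles_deg[i].
    """
    a11 = a12 = a22 = b1 = b2 = 0.0
    for angle, speed in zip(normal_angles_deg, normal_speeds):
        nx = math.cos(math.radians(angle))
        ny = math.sin(math.radians(angle))
        # Accumulate the 2x2 normal equations (N^T N) v = N^T s.
        a11 += nx * nx
        a12 += nx * ny
        a22 += ny * ny
        b1 += nx * speed
        b2 += ny * speed
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        # A single contour orientation leaves the motion ambiguous.
        raise ValueError("aperture problem: need at least two contour orientations")
    vx = (a22 * b1 - a12 * b2) / det
    vy = (a11 * b2 - a12 * b1) / det
    return vx, vy


# An object moving rightwards at unit speed, seen through two apertures whose
# contours have normals at +45 and -45 degrees.
component = math.cos(math.radians(45))
print(global_velocity([45, -45], [component, component]))
```

With only one contour orientation the system is singular, which is the aperture problem restated in algebraic terms.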

Amodal perception

Amodal perception is the term used to describe the full perception of a physical structure when it is only partially perceived. For example, a table will be perceived as a complete volumetric structure even if only part of it is visible; the internal volumes and hidden rear surfaces are perceived despite the fact that only the near surfaces are exposed to view, and the world around us is perceived as a surrounding void, even though only part of it is in view at any time.

Formulation of the theory is credited to the Belgian psychologist Albert Michotte and Italian psychologist Fabio Metelli, with their work developed in recent years by E.S. Reed and Gestaltists.

Modal completion is a similar phenomenon in which a shape is perceived to be occluding other shapes even when the shape itself is not drawn. Examples include the triangle that appears to be occluding three disks in the Kanizsa triangle and the circles and squares that appear in different versions of the Koffka cross.

Haptic perception

Gibson (1966) defines the haptic system as "The sensibility of the individual to the world adjacent to his body by use of his body." The haptic perceptual system is unusual in that it can include the sensory receptors from the whole body and is closely linked to the movement of the body, so it can have a direct effect on the world being perceived. The concept of haptic perception is closely allied to two others: active touch, the recognition that more information is gathered when a motor plan (movement) is associated with the sensory system, and extended physiological proprioception, the realization that when using a tool such as a stick, the perception is transparently transferred to the end of the tool.

It has been found recently (Robles-De-La-Torre & Hayward, 2001) that haptic perception strongly relies on the forces experienced during touch. This research allows the creation of "virtual," illusory haptic shapes with different perceived qualities (see "The Cutting edge of haptics").

Interestingly, the capabilities of the haptic sense, and of the somatic senses in general, have traditionally been underrated. Contrary to common expectation, loss of the sense of touch is a catastrophic deficit. It makes it almost impossible to walk or perform other skilled actions such as holding objects or using tools (Robles-De-La-Torre 2006). This highlights the critical and subtle capabilities of touch and the somatic senses in general. It also highlights the potential of haptic technology.

Speech perception

Speech perception refers to the processes by which humans are able to interpret and understand the sounds used in language. The study of speech perception is closely linked to the fields of phonetics and phonology in linguistics, and to cognitive psychology and perception in psychology. Research in speech perception seeks to understand how human listeners recognize speech sounds and use this information to understand spoken language. Speech research has applications in building computer systems that can recognize speech, as well as improving speech recognition for hearing- and language-impaired listeners.

The process of perceiving speech begins at the level of the sound signal and the process of audition. (For a complete description of the process of audition see Hearing.) After processing the initial auditory signal, speech sounds are further processed to extract acoustic cues and phonetic information. This speech information can then be used for higher-level language processes, such as word recognition.

Proprioception

Proprioception (from Latin proprius, meaning "one's own" and perception) is the sense of the relative position of neighbouring parts of the body. Unlike the six exteroceptive senses (sight, taste, smell, touch, hearing, and balance) by which we perceive the outside world, and interoceptive senses, by which we perceive the pain and the stretching of internal organs, proprioception is a third distinct sensory modality that provides feedback solely on the status of the body internally. It is the sense that indicates whether the body is moving with required effort, as well as where the various parts of the body are located in relation to each other.

The Study of Perception

Perception is one of the oldest fields within scientific psychology, and there are correspondingly many theories about its underlying processes. The oldest quantitative law in psychology is the Weber-Fechner law, which quantifies the relationship between the intensity of physical stimuli and their perceptual effects. It was the study of perception that gave rise to the Gestalt school of psychology, with its emphasis on a holistic approach.
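In its usual textbook form, the Weber-Fechner law states that perceived intensity grows with the logarithm of stimulus intensity, p = k ln(S / S0), where S0 is the detection threshold and k a constant that depends on the sense in question. The sketch below simply evaluates that formula; the function name, the threshold, and the value of k are placeholders chosen for illustration.

```python
import math


def perceived_intensity(stimulus, threshold, k=1.0):
    """Weber-Fechner law: p = k * ln(S / S0).

    `stimulus` (S) and `threshold` (S0) are in the same physical units;
    `k` is a modality-dependent constant (placeholder value here).
    """
    if stimulus <= 0 or threshold <= 0:
        raise ValueError("stimulus and threshold must be positive")
    return k * math.log(stimulus / threshold)


# Doubling the stimulus adds the same perceptual increment at any level.
step_low = perceived_intensity(20.0, 1.0) - perceived_intensity(10.0, 1.0)
step_high = perceived_intensity(200.0, 1.0) - perceived_intensity(100.0, 1.0)
print(step_low, step_high)
```

Both steps equal k ln 2, which expresses the underlying observation that a just-noticeable change is a constant proportion of the current stimulus rather than a constant amount.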

The word perception comes from the Latin perceptio, percipio, meaning "receiving, collecting, action of taking possession, apprehension with the mind or senses" (OED.com). Methods of studying perception range from essentially biological or physiological approaches, through psychological approaches, to the philosophy of mind and empiricist epistemology, such as that of David Hume, John Locke, and George Berkeley, or Merleau-Ponty's affirmation of perception as the basis of all science and knowledge.

There are two basic understandings of perception: Passive Perception (PP) and Active Perception (PA). Passive perception (conceived by René Descartes) is addressed in this article and can be summarized as the following sequence of events: surrounding -> input (senses) -> processing (brain) -> output (re-action). Although still supported by mainstream philosophers, psychologists and neurologists, this theory is nowadays losing momentum. The theory of active perception has emerged from extensive research on sensory illusions, led by the work of Professor Emeritus Richard L. Gregory. This theory is increasingly gaining experimental support and can be summarized as a dynamic relationship between “description” (in the brain) <-> senses <-> surrounding.

The philosophy of perception concerns how mental processes and symbols depend on the world internal and external to the perceiver. The philosophy of perception is very closely related to a branch of philosophy known as epistemology, the theory of knowledge. While René Descartes concluded that the question "Do I exist?" can only be answered in the affirmative (cogito ergo sum), Freudian psychology suggests that self-perception is an illusion of the ego, and cannot be trusted to decide what is in fact real. Such questions are continuously reanimated, as each generation grapples with the nature of existence from within the human condition. The questions remain: Do our perceptions allow us to experience the world as it "really is?" Can we ever know another point of view in the way we know our own?

Theories of Perception

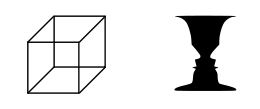

The most common theory of perception is naïve realism, in which people believe that what they perceive is things in themselves. Children develop this theory as a working hypothesis of how to deal with the world. Many people who have not studied biology carry this theory into adult life and regard their perception as the world itself rather than a pattern that overlays the form of the world. Thomas Reid took this theory a step further: he realised that sensation was composed of a set of data transfers but declared that these were in some way transparent, so that there is a direct connection between perception and the world. This idea is called direct realism and has become popular in recent years with the rise of postmodernism and behaviourism. Direct realism does not clearly specify the nature of the bit of the world that is an object in perception, especially in cases where the object is something like a silhouette.

The succession of data transfers that are involved in perception suggests that somewhere in the brain there is a final set of activity, called sense data, that is the substrate of the percept. Perception would then be some form of brain activity and somehow the brain would be able to perceive itself. This concept is known as indirect realism. In indirect realism it is held that we can only be aware of external objects by being aware of representations of objects. This idea was held by John Locke and Immanuel Kant. The common argument against indirect realism, used by Gilbert Ryle amongst others, is that it implies a homunculus or Ryle's regress where it appears as if the mind is seeing the mind in an endless loop. This argument assumes that perception is entirely due to data transfer and classical information processing. This assumption is highly contentious (see strong AI) and the argument can be avoided by proposing that the percept is a phenomenon that does not depend wholly upon the transfer and rearrangement of data.

Direct realism and indirect realism are known as realist theories of perception because they hold that there is a world external to the mind. Direct realism holds that the representation of an object is located next to, or is even part of, the actual physical object whereas indirect realism holds that the representation of an object is brain activity. Direct realism proposes some as yet unknown direct connection between external representations and the mind whilst indirect realism requires some feature of modern physics to create a phenomenon that avoids infinite regress. Indirect realism is consistent with experiences such as: binding, dreams, imaginings, hallucinations, illusions, the resolution of binocular rivalry, the resolution of multistable perception, the modelling of motion that allows us to watch TV, the sensations that result from direct brain stimulation, the update of the mental image by saccades of the eyes and the referral of events backwards in time.

There are also anti-realist understandings of perception. The two varieties of anti-realism are idealism and skepticism. Idealism holds that we create our reality, whereas skepticism holds that reality is always beyond us. One of the most influential proponents of idealism was George Berkeley, who maintained that everything was mind or dependent upon mind. Berkeley's idealism has two main strands: phenomenalism, in which physical events are viewed as a special kind of mental event, and subjective idealism. David Hume is probably the most influential proponent of skepticism.

Cognitive theories of perception assume there is a poverty of stimulus. This (with reference to perception) is the claim that sensations are, by themselves, unable to provide a unique description of the world. Sensations require enriching, which is the role of the mental model. A different type of theory is the perceptual ecology approach of James J. Gibson. Gibson rejected the assumption of a poverty of stimulus by rejecting the notion that perception is based in sensations. Instead, he investigated what information is actually presented to the perceptual systems. He (and the psychologists who work within this paradigm) detailed how the world could be specified to a mobile, exploring organism via the lawful projection of information about the world into energy arrays. Specification is a 1:1 mapping of some aspect of the world into a perceptual array; given such a mapping, no enrichment is required and perception is direct.
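The poverty-of-stimulus claim can be illustrated with a deliberately simplified geometric sketch. It assumes an idealized pinhole eye, which is an assumption made here for illustration and not a model taken from the theories above. Under that assumption the size of the projected image depends only on the ratio of object size to distance, so different scenes can produce the same sensation. The function name `projected_size` and the 17 mm focal length are hypothetical choices.

```python
def projected_size(object_height_m: float, distance_m: float,
                   focal_length_m: float = 0.017) -> float:
    """Image height under an idealized pinhole model (similar triangles)."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return focal_length_m * object_height_m / distance_m


# Two different scenes: a 1 m object at 10 m and a 2 m object at 20 m.
near_small = projected_size(1.0, 10.0)
far_large = projected_size(2.0, 20.0)

# Both scenes yield the same image size (up to rounding), so the image
# alone cannot tell the observer which scene is present.
same_image = abs(near_small - far_large) < 1e-12
print(same_image)
```

On a cognitive account, resolving this ambiguity is the work of the mental model; on Gibson's account, a moving observer has access to further information that the single static image in this sketch leaves out.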

Perception and Reality

Many cognitive psychologists hold that, as we move about in the world, we create a model of how the world works. That is, we sense the objective world, but our sensations map to percepts, and these percepts are provisional, in the same sense that scientific hypotheses are provisional (cf. scientific method). As we acquire new information, our percepts shift, which supports the idea that perception is a matter of belief. The biography of Abraham Pais refers to the esemplastic nature of imagination. In the case of visual perception, some people can actually see the percept shift in their mind's eye. Others, who are not picture thinkers, may not necessarily perceive the 'shape-shifting' as their world changes. The esemplastic nature has been shown by experiment: an ambiguous image has multiple interpretations on the perceptual level.

Just as one object can give rise to multiple percepts, so an object may fail to give rise to any percept at all: if the percept has no grounding in a person's experience, the person may literally not perceive it.

This confusing ambiguity of perception is exploited in human technologies such as camouflage, and also in biological mimicry, for example by Peacock butterflies, whose wings bear eye markings that birds respond to as though they were the eyes of a dangerous predator. Perceptual ambiguity is not restricted to vision. For example, recent touch perception research (Robles-De-La-Torre & Hayward 2001) found that kinesthesia-based haptic perception strongly relies on the forces experienced during touch. This makes it possible to produce illusory touch percepts (see also the MIT Technology Review article The Cutting Edge of Haptics).

Perception-in-Action

The ecological understanding of perception that advanced from Gibson's early work is perception-in-action: the notion that perception is a requisite property of animate action. Without perception, action would not be guided, and without action, perception would be pointless. Animate actions require perceiving and moving together. In a sense, "perception and movement are two sides of the same coin, the coin is action." [15] A mathematical theory of perception-in-action, General Tau Theory, has been devised and investigated in many forms of controlled movement by many different species of organism. According to this theory, tau information, or time-to-goal information, is the fundamental 'percept' in perception.
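In Lee's formulation, the tau of a gap is commonly defined as the current size of the gap divided by its current rate of change, which gives a first-order estimate of time-to-goal. A minimal sketch under that definition follows. The function name `tau` and the sample numbers are hypothetical, and the sign convention (a closing gap has a negative rate of change) is an assumption of this sketch.

```python
def tau(gap: float, gap_rate: float) -> float:
    """Tau of a gap: gap size divided by its rate of change."""
    if gap_rate == 0:
        raise ValueError("tau is undefined when the gap is not changing")
    return gap / gap_rate


# A 20 m gap closing at 5 m/s, so its rate of change is -5 m/s.
t = tau(20.0, -5.0)      # negative under this sign convention
time_to_goal = -t        # seconds remaining if the closure rate stays constant
print(time_to_goal)
```

The point of the sketch is that the ratio can be computed without knowing distance and speed separately in any particular units, which is one reason it has been proposed as information an organism could use directly to guide movement.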

We gather information about the world and interact with it through our actions. Perceptual information is critical for action. Perceptual deficits may lead to profound deficits in action (for touch-perception-related deficits, see [16]).

Perceptual space

One important aspect of perception research that is common to both realists and anti-realists is the idea of mental or perceptual space. David Hume considers this at some length and concludes that things appear extended because they have the attributes of colour and solidity. A popular modern philosophical view is that the brain cannot contain images, so our sense of space must be due to the actual space occupied by physical things. However, as René Descartes noticed, perceptual space has a projective geometry: things within it appear as if they are viewed from a point and are not simply objects arranged in 3D. Mathematicians now know of many types of projective geometry, such as complex Minkowski space, that might describe the layout of things in perception (see Peters (2000)). It is also known that many parts of the brain contain patterns of electrical activity that correspond closely to the layout of the retinal image (this is known as retinotopy). There are indeed images in the brain, but how or whether these become conscious experience is a mystery (see McGinn (1995)).

Perceptual abilities and the Observer

A person’s sensory and perceptual experiences are affected by race, gender, and age. Unlike puppies and kittens, human babies are born with their eyes open and functioning. Neonates begin to absorb and process information from the outside world as soon as they enter it (in some respects even before). Even before babies are born, their ears are in working order. Fetuses in the uterus can hear sounds and startle at a sudden, loud noise in the mother’s environment. After birth, babies show signs that they remember sounds they heard in the womb. Babies are also born with the ability to tell the direction of a sound. They show this by turning their heads toward the source of a sound. Infants are particularly tuned in to the sounds of human speech. Their senses work fairly well at birth and rapidly improve to near-adult levels. Besides experience and learning, our perceptions can also be influenced by our motivations, values, expectations, cognitive style, and cultural preconceptions.

Perception is referred to as a cognitive process in which information processing is used to transfer information from the world into the brain and mind, where it is further processed and related to other information. Some philosophers and psychologists propose that this processing gives rise to particular mental states (cognitivism), whilst others envisage a direct path back into the external world in the form of action. Many eminent behaviourists, such as John B. Watson and B.F. Skinner, have proposed that perception acts largely as a process between a stimulus and a response, but despite this have noted that Ryle's "ghost in the machine" of the brain still seems to exist. As Skinner wrote: "The objection to inner states is not that they do not exist, but that they are not relevant in a functional analysis" (Science and Human Behavior, 1953. ISBN 0-02-929040-6). This view, in which experience is thought to be an incidental by-product of information processing, is known as epiphenomenalism.

Scientific accounts in understanding perception

The science of perception is concerned with how events are observed and interpreted. An event may be the occurrence of an object at some distance from an observer. According to the scientific account, this object will reflect light from the sun in all directions. Some of this reflected light from a particular, unique point on the object will fall all over the corneas of the eyes, and the combined cornea/lens system of the eyes will divert the light to two points, one on each retina. The pattern of points of light on each retina forms an image. This process also occurs in the case of silhouettes, where the pattern of absence of points of light forms an image. The overall effect is to encode position data on a stream of photons and to transfer this encoding onto a pattern on the retinas. The patterns on the retinas are the only optical images found in perception. Prior to the retinas, light is arranged as a fog of photons going in all directions.

The images on the two retinas are slightly different and the disparity between the electrical outputs from these is resolved either at the level of the lateral geniculate nucleus or in a part of the visual cortex called 'V1'. The resolved data is further processed in the visual cortex where some areas have relatively more specialised functions, for instance area V5 is involved in the modelling of motion and V4 in adding colour. The resulting single image that subjects report as their experience is called a 'percept'. Studies involving rapidly changing scenes show that the percept derives from numerous processes that each involve time delays (see Moutoussis and Zeki (1997)).
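The geometric information carried by the difference between the two retinal images can be sketched with the standard parallel-axis pinhole stereo relation, in which depth equals focal length times baseline divided by disparity. This is an engineering idealization offered here as an illustration, not a description of how the lateral geniculate nucleus or V1 resolves disparity. The function name `depth_from_disparity`, the 65 mm eye separation, and the 17 mm focal length are assumed values.

```python
def depth_from_disparity(disparity_m: float,
                         baseline_m: float = 0.065,
                         focal_length_m: float = 0.017) -> float:
    """Depth of a point from its image disparity (parallel pinhole eyes)."""
    if disparity_m <= 0:
        raise ValueError("disparity must be positive for a point in front of the eyes")
    return focal_length_m * baseline_m / disparity_m


# Disparity that a point 2 m away would produce under the same model.
disparity_at_2m = 0.017 * 0.065 / 2.0

recovered_depth = depth_from_disparity(disparity_at_2m)
print(recovered_depth)

# Larger disparity corresponds to a nearer point.
nearer = depth_from_disparity(2 * disparity_at_2m)
print(nearer < recovered_depth)
```

Because disparity shrinks in inverse proportion to distance, this model also suggests why binocular depth information becomes less useful for distant objects.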

Recent fMRI studies show that dreams, imaginings, and perceptions of similar things such as faces are accompanied by activity in many of the same areas of the brain. It seems that imagery that originates from the senses and internally generated imagery may have a shared ontology at higher levels of cortical processing.

If an object is also a source of sound, this is transmitted as pressure waves that are sensed by the cochlea in the ear. If the observer is blindfolded, it is difficult to locate the exact source of sound waves; if the blindfold is removed, the sound can usually be located at the source. The data from the eyes and the ears are combined to form a 'bound' percept. The problem of how the bound percept is produced is known as the binding problem and is the subject of considerable study. The binding problem is also a question of how different aspects of a single sense (say, color and contour in vision) are bound to the same object when they are processed by spatially different areas of the brain.

Notes

- ↑ Bradley Steffens (2006). Ibn al-Haytham: First Scientist, Chapter 5. Morgan Reynolds Publishing. ISBN 1599350246.

- ↑ Omar Khaleefa (Summer 1999). "Who Is the Founder of Psychophysics and Experimental Psychology?," American Journal of Islamic Social Sciences 16 (2).

- ↑ Mamassian, Landy & Maloney (2002)

- ↑ A Primer on Probabilistic Approaches to Visual Perception

- ↑ Computational models of contour integration, by Steve Dakin

- ↑ Neitz, Jay & Jacobs, Gerald H. (1986). "Polymorphism of the long-wavelength cone in normal human colour vision." Nature. 323, 623-625.

- ↑ Jacobs, Gerald H. (1996). "Primate photopigments and primate color vision." PNAS. 93 (2), 577–581.

- ↑ Reichardt, W. (1961). Autocorrelation, a principle for the evaluation of sensory information by the central nervous system. In W.A. Rosenblith (Ed.), Sensory Communication: 303-317.

- ↑ Adelson, E.H., & Bergen, J.R. (1985). Spatiotemporal energy models for the perception of motion. J Opt Soc Am A, 2 (2): 284-299.

- ↑ van Santen, J.P., & Sperling, G. (1985). Elaborated Reichardt detectors. J Opt Soc Am A, 2 (2): 300-321.

- ↑ Cavanagh, P. & Mather, G. (1989). Motion: the long and short of it. Spatial Vision 4: 103-129.

- ↑ Chubb, C. & Sperling, G. (1988). Drift-balanced random stimuli: A general basis for studying non-Fourier motion perception. J Opt Soc Am A, 5: 1986-2007.

- ↑ Nishida, S., Ledgeway, T. & Edwards, M. (1997). Dual multiple-scale processing for motion in the human visual system. Vision Research 37: 2685-2698.

- ↑ Ledgeway, T. & Smith, A.T. (1994). The duration of the motion aftereffect following adaptation to first- and second-order motion. Perception 23: 1211-1219.

- ↑ D.N. Lee Retrieved August 30, 2007.

- ↑ Robles-De-La-Torre (2006) The Importance of the Sense of Touch in Virtual and Real Environments Retrieved August 30, 2007.

References

- Breckon, Toby, and Fisher, Robert (2005). "Amodal volume completion: 3D visual completion," Computer Vision and Image Understanding 99, pp. 499-526. http://www.inf.ed.ac.uk/publications/online/0317.pdf

- BonJour L. 2001. "Epistemological Problems of Perception," The Stanford Encyclopedia of Philosophy, Edward Zalta (ed.). Online text

- Burge, T. 1991. "Vision and Intentional Content," in E. LePore and R. Van Gulick (eds.) John Searle and his Critics, Oxford: Blackwell.

- Crane, T. 2005. "The Problem of Perception," The Stanford Encyclopedia of Philosophy, Edward Zalta (ed.). Online text

- Conway, B.R. 2001. "Spatial structure of cone inputs to color cells in alert macaque primary visual cortex (V-1)" Journal of Neuroscience. 21 (8), 2768-2783.

- Descartes, René 1641. Meditations on First Philosophy. Online text

- Dretske, Fred 1981. Knowledge and the Flow of Information, Oxford: Blackwell.

- Evans, Gareth 1982. The Varieties of Reference, Oxford: Clarendon Press.

- Flynn, Bernard 2004. "Maurice Merleau-Ponty," The Stanford Encyclopedia of Philosophy, Edward Zalta (ed.). Online text

- Hayward V, Astley OR, Cruz-Hernandez M, Grant D, Robles-De-La-Torre G. Haptic interfaces and devices. Sensor Review 24(1), pp. 16-29 (2004).

- Hume, David 1739-40. A Treatise of Human Nature: Being An Attempt to Introduce the Experimental Method of Reasoning Into Moral Subjects. Online text

- Infoactivity Genesis of perception investigation

- Lehar, Steven (1999). "Gestalt Isomorphism and the Quantification of Spatial Perception," Gestalt Theory 21 (2), pp. 122-139. http://gestalttheory.net/archive/lehar.pdf

- Gibson, James J. The Senses Considered as Perceptual Systems. Boston, 1966.

- Gibson, James J. The Ecological Approach to Visual Perception. Lawrence Erlbaum Associates, 1987. ISBN 0898599598

- Nolte J. The Human Brain: An Introduction to Its Functional Anatomy. 5th ed. St. Louis: Mosby, Inc.; 2002. ISBN 0-323-01320-1

- Kant, Immanuel 1781. Critique of Pure Reason. Norman Kemp Smith (trans.) with preface by Howard Caygill, Palgrave Macmillan. Online text

- Kandel E, Schwartz J, Jessel T. Principles of Neural Science. 4th ed. New York: McGraw-Hill; 2000. ISBN 0-8385-7701-6

- Kitayama, S., Duffy, S., Kawamura, T., & Larsen, J. T. 2003. Perceiving an object and its context in different cultures: A cultural look at New Look. Psychological Science, 14, 201-206.

- Lachman, S. J. 1984. Processes in visual misperception: Illusions for highly structured stimulus material. Paper presented at the 92nd annual convention of the American Psychological Association, Toronto, Canada.

- Lachman, S. J. 1996. Processes in Perception: Psychological transformations of highly structured stimulus material. Perceptual and Motor Skills. 83, 411-418.

- Lacewing, Michael (unpublished). "Phenomenalism." Online PDF

- Locke, John 1689. An Essay Concerning Human Understanding. Online text

- Martin, Paul R (1998). "Colour processing in the primate retina: recent progress." Journal of Physiology. 513 (3), 631-638.

- McCann, M., ed. 1993. Edwin H. Land's Essays. Springfield, Va.: Society for Imaging Science and Technology.

- McClelland, D. C., and Atkinson, J. W. 1948. The projective expression of needs: The effect of different intensities of the hunger drive on perception. Journal of Psychology, 25, 205-222.

- McCreery, Charles (2006). "Perception and Hallucination: the Case for Continuity." Philosophical Paper No. 2006-1. Oxford: Oxford Forum. Online PDF

- Merleau-Ponty, Maurice. Phenomenology of Perception. London: Routledge, 1995.

- Rowe, Michael H. (2002). "Trichromatic color vision in primates." News in Physiological Sciences. 17 (3), 93-98.

- McDowell, John, (1982). "Criteria, Defeasibility, and Knowledge," Proceedings of the British Academy, pp. 455–79.

- McDowell, John, (1994). Mind and World, Cambridge, Mass.: Harvard University Press.

- McGinn, Colin (1995). "Consciousness and Space," In Conscious Experience, Thomas Metzinger (ed.), Imprint Academic. Online text

- Mead, George Herbert (1938). "Mediate Factors in Perception," Essay 8 in The Philosophy of the Act, Charles W. Morris with John M. Brewster, Albert M. Dunham and David Miller (eds.), Chicago: University of Chicago, pp. 125-139. Online text

- Moutoussis, K. and Zeki, S. (1997). "A Direct Demonstration of Perceptual Asynchrony in Vision," Proceedings of the Royal Society of London, Series B: Biological Sciences, 264, pp. 393-399.

- Flanagan, J.R., Lederman, S.J. Neurobiology: Feeling bumps and holes, News and Views, Nature, 412(6845):389-91 (2001).

- Byrne, Alex and Hilbert, D.S. (1997). Readings on Color, Volume 2: The Science of Color, 2nd ed., Cambridge, Massachusetts: MIT Press. ISBN 0-262-52231-4.

- Kaiser, Peter K. and Boynton, R.M. (1996). Human Color Vision, 2nd ed., Washington, DC: Optical Society of America. ISBN 1-55752-461-0.

- Wyszecki, Günther and Stiles, W.S. (2000). Color Science: Concepts and Methods, Quantitative Data and Formulae, 2nd edition, New York: Wiley-Interscience. ISBN 0-471-39918-3.

- McIntyre, Donald (2002). Colour Blindness: Causes and Effects. UK: Dalton Publishing. ISBN 0-9541886-0-8.

- Shevell, Steven K. (2003). The Science of Color, 2nd ed., Oxford, UK: Optical Society of America. ISBN 0-444-512-519.

- Palmer, S. E. (1999) Vision science: Photons to phenomenology. Cambridge, MA: Bradford Books/MIT Press.

- Pinker, S. (1997). The Mind’s Eye. In How the Mind Works (pp. 211–233) ISBN 0-393-31848-6

- Purves D, Lotto B (2003) Why We See What We Do: An Empirical Theory of Vision. Sunderland, MA: Sinauer Associates.

- Scott B. Steinman, Barbara A. Steinman and Ralph Philip Garzia. (2000). Foundations of Binocular Vision: A Clinical perspective. McGraw-Hill Medical. ISBN 0-8385-2670-5

- Peacocke, Christopher (1983). Sense and Content, Oxford: Oxford University Press.

- Peters, G. (2000). "Theories of Three-Dimensional Object Perception - A Survey," Recent Research Developments in Pattern Recognition, Transworld Research Network. Online text

- Putnam, Hilary (1999). The Threefold Cord, New York: Columbia University Press.

- Read, Czerne (unpublished). "Dreaming in Color." Online text

- Russell, Bertrand (1912). The Problems of Philosophy, London: Williams and Norgate; New York: Henry Holt and Company. Online text

- Skinner, B.F. Science and Human Behavior, 1953. ISBN 0-02-929040-6

- Shoemaker, Sydney (1990). "Qualities and Qualia: What's in the Mind?" Philosophy and Phenomenological Research 50, Supplement, pp. 109–31.

- Siegel, Susanna (2005). "The Contents of Perception," The Stanford Encyclopedia of Philosophy, Edward Zalta (ed.). Online text

- Tong, Frank (2003). "Primary Visual Cortex and Visual Awareness," Nature Reviews, Neuroscience, Vol 4, 219. Online text

- Robles-De-La-Torre G. & Hayward V. Force Can Overcome Object Geometry In the perception of Shape Through Active Touch. Nature 412 (6845):445-8 (2001).

- Robles-De-La-Torre G. The Importance of the Sense of Touch in Virtual and Real Environments. IEEE Multimedia 13(3), Special issue on Haptic User Interfaces for Multimedia Systems, pp. 24-30 (2006).

- Turnbull, C. M. 1961. Observations. American Journal of Psychology, 1, 304-308.

- Tye, Michael (2000). Consciousness, Color and Content, Cambridge, MA: MIT Press.

- Verrelli, BC; Tishkoff, S (2004). "Color vision molecular variation." American Journal of Human Genetics. 75 (3), 363-375.

- Wandell, B. "Foundations of Vision". Sinauer Press, MA.

- Witkin, et al. 1962. Psychological Differentiation. New York: Wiley.

External links

- Dale Purves Lab

- Depth perception and sensory substitution

- Online papers on perception, by various authors, compiled by David Chalmers

- Paradoxical haptic objects. An example of touch illusions of shape. See also the MIT Technology Review article The Cutting Edge of Haptics, by Duncan Graham-Rowe.

- Theories of Perception

- Richard L Gregory

- "Evidence that men, women literally see the world differently: Study shows color vision may have been adaptive during evolution."

- Spectral Sensitivity of the Eye.

- Vision may not be what we thought.

- Overview of color vision.

- The decoding model: a symmetrical model of color vision.

- Working examples of Chromatic Adaptation.

- Egopont color vision test

- Empiristic theory of visual gestalt perception

- Visual Perception 3 - Cultural and Environmental Factors

- Gestalt Laws

- Summary of Kosslyn et al.'s theory of high-level vision

- The Organization of the Retina and Visual System

- Dr Trippy's Sensorium A website dedicated to the study of the human sensorium and organisational behaviour

- University of Nottingham Visual Neuroscience

- McGill Vision Research

- Purves Lab

- Center for Vision Research

- iLab at the University of Southern California

Credits

New World Encyclopedia writers and editors rewrote and completed the Wikipedia article in accordance with New World Encyclopedia standards. This article abides by terms of the Creative Commons CC-by-sa 3.0 License (CC-by-sa), which may be used and disseminated with proper attribution. Credit is due under the terms of this license that can reference both the New World Encyclopedia contributors and the selfless volunteer contributors of the Wikimedia Foundation. The history of earlier contributions by Wikipedians is accessible to researchers here:

- Perception history

- Amodal_perception history

- Color_vision history

- Visual_perception history

- Depth_perception history

- Philosophy_of_perception history

- Motion_perception history

Note: Some restrictions may apply to use of individual images which are separately licensed.