Artificial intelligence

Artificial intelligence (AI) is a branch of computer science and engineering that deals with intelligent behavior, learning, and adaptation in machines. John McCarthy coined the term to mean "the science and engineering of making intelligent machines."[1] Research in AI is concerned with producing machines to automate tasks requiring intelligent behavior. Examples include control systems; automated planning and scheduling; the ability to answer diagnostic and consumer questions; and handwriting, speech, and facial recognition. As such, it has become an engineering discipline focused on providing solutions to real-life problems, with applications in software, traditional strategy games such as computer chess, and various video games.

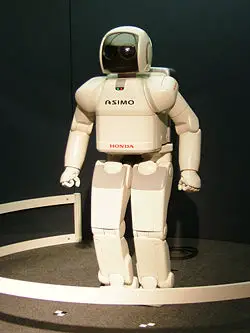

Artificial intelligence is used today for many different purposes throughout the world. It can create safer environments for workers by using robots in dangerous situations. In the future, it may be used more for human interaction; for example, an automated teller could perform visual recognition and respond to a customer personally.

Schools of thought

AI divides roughly into two schools of thought: Conventional AI and Computational Intelligence (CI), also sometimes referred to as Synthetic Intelligence.

Conventional AI mostly involves methods now classified as machine learning, characterized by formalism and statistical analysis. This is also known as symbolic AI, logical AI, or neat AI. Methods include:

- Expert systems: apply reasoning capabilities to reach a conclusion. An expert system can process large amounts of known information and provide conclusions based on it.

- Case-based reasoning: the process of solving new problems based on the solutions of similar past problems.

- Bayesian networks: represent a set of variables together with a joint probability distribution with explicit independence assumptions.

- Behavior-based AI: a modular method of building AI systems by hand.
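As an illustration of the Bayesian network entry above, the following is a minimal sketch rather than a definitive implementation. It assumes a hypothetical three-variable network (rain, sprinkler, wet grass) in which rain and the sprinkler are taken to be independent, so the joint distribution factors into three small tables. The variable names and all probability values are made up for the example.

```python
from itertools import product

# Hypothetical network: Rain and Sprinkler are independent causes of WetGrass.
# All numbers are illustrative assumptions, not data from any source.
P_RAIN = 0.2         # P(Rain = True)
P_SPRINKLER = 0.3    # P(Sprinkler = True)
P_WET_GIVEN = {      # P(WetGrass = True | Rain, Sprinkler)
    (True, True): 0.99,
    (True, False): 0.90,
    (False, True): 0.80,
    (False, False): 0.05,
}


def bernoulli(p_true, value):
    """Probability that a binary variable takes `value`, given P(True)."""
    return p_true if value else 1.0 - p_true


def joint(rain, sprinkler, wet):
    """Joint probability using the factorization P(R) * P(S) * P(W | R, S)."""
    return (
        bernoulli(P_RAIN, rain)
        * bernoulli(P_SPRINKLER, sprinkler)
        * bernoulli(P_WET_GIVEN[(rain, sprinkler)], wet)
    )


def prob_rain_given_wet():
    """P(Rain = True | WetGrass = True), by summing out the sprinkler."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    evidence = sum(
        joint(r, s, True) for r, s in product((True, False), repeat=2)
    )
    return numerator / evidence


if __name__ == "__main__":
    print(round(prob_rain_given_wet(), 3))
```

The independence assumption is what keeps the model small: the network stores six numbers instead of one entry for each of the eight joint outcomes, and the saving grows quickly as more variables are added.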

Computational Intelligence involves iterative development or learning. Learning is based on empirical data. It is also known as non-symbolic AI, scruffy AI, and soft computing. Methods mainly include:

- Neural networks: systems with very strong pattern recognition capabilities.

- Fuzzy systems: techniques for reasoning under uncertainty that have been widely used in modern industrial and consumer product control systems.

- Evolutionary computation: applies biologically inspired concepts such as populations, mutation, and survival of the fittest to generate increasingly better solutions to the problem. These methods most notably divide into evolutionary algorithms and swarm intelligence.
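The evolutionary computation entry above can be sketched in a few lines. This is a minimal example under stated assumptions, not a reference algorithm: the toy objective (maximizing the number of 1 bits in a bit string), the function names, and all parameter defaults are hypothetical choices made for illustration. It shows only a simple evolutionary algorithm with a population, mutation, and survival of the fittest; swarm intelligence is not covered.

```python
import random


def fitness(bits):
    """Toy objective (an assumption for this sketch): count the 1 bits."""
    return sum(bits)


def mutate(bits, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [1 - b if rng.random() < rate else b for b in bits]


def evolve(genome_length=20, population_size=30, generations=40,
           mutation_rate=0.05, seed=0):
    """Run a simple evolutionary loop and return (best genome, fitness history)."""
    rng = random.Random(seed)
    population = [
        [rng.randint(0, 1) for _ in range(genome_length)]
        for _ in range(population_size)
    ]
    best_per_generation = []
    for _ in range(generations):
        # Survival of the fittest: rank the population and keep the top half.
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        survivors = population[: population_size // 2]
        # Refill the population with mutated copies of randomly chosen survivors.
        offspring = [
            mutate(rng.choice(survivors), mutation_rate, rng)
            for _ in range(population_size - len(survivors))
        ]
        population = survivors + offspring
    best = max(population, key=fitness)
    return best, best_per_generation


if __name__ == "__main__":
    best, history = evolve()
    print(fitness(best), history[0], history[-1])
```

Because the survivors are carried over unchanged, the best fitness in the population never decreases from one generation to the next, which is one simple way to obtain the "increasingly better solutions" described above.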

Hybrid intelligent systems attempt to combine these two groups. It is thought that the human brain uses multiple techniques to both formulate and cross-check results. Thus, systems integration is seen as promising and perhaps necessary for true AI.

History

Early in the seventeenth century, René Descartes envisioned the bodies of animals as complex but reducible machines, thus formulating the mechanistic theory, also known as the "clockwork paradigm." Wilhelm Schickard created the first mechanical, digital calculating machine in 1623, followed by machines of Blaise Pascal (1643) and Gottfried Wilhelm von Leibniz (1671), who also invented the binary system. In the nineteenth century, Charles Babbage and Ada Lovelace worked on programmable mechanical calculating machines.

Bertrand Russell and Alfred North Whitehead published Principia Mathematica in 1910–1913, which revolutionized formal logic. In 1931 Kurt Gödel showed that sufficiently powerful consistent formal systems contain true statements that cannot be proved by any theorem-proving AI that systematically derives all possible theorems from the axioms. In 1941 Konrad Zuse built the first working program-controlled computers. Warren McCulloch and Walter Pitts published A Logical Calculus of the Ideas Immanent in Nervous Activity (1943), laying the foundations for neural networks. Norbert Wiener's Cybernetics or Control and Communication in the Animal and the Machine (1948) popularized the term "cybernetics."

1950s

The 1950s were a period of active efforts in AI. In 1950, Alan Turing introduced the "Turing test," a test of intelligent behavior. The first working AI programs were written in 1951 to run on the Ferranti Mark I machine of the University of Manchester: a draughts-playing program written by Christopher Strachey and a chess-playing program written by Dietrich Prinz. John McCarthy coined the term "artificial intelligence" at the first conference devoted to the subject, in 1956. He also invented the Lisp programming language. Joseph Weizenbaum later built ELIZA, a chatterbot implementing Rogerian psychotherapy, in the mid-1960s. The birth date of AI is generally considered to be July 1956 at the Dartmouth Conference, where many of these people met and exchanged ideas.

At the same time, John von Neumann, who had been hired by the RAND Corporation, developed game theory, which would prove invaluable in the progress of AI research.

1960s–1970s

During the 1960s and 1970s, Joel Moses demonstrated the power of symbolic reasoning for integration problems in the Macsyma program, the first successful knowledge-based program in mathematics. Leonard Uhr and Charles Vossler published "A Pattern Recognition Program That Generates, Evaluates, and Adjusts Its Own Operators" in 1963, which described one of the first machine learning programs that could adaptively acquire and modify features. Marvin Minsky and Seymour Papert published Perceptrons, which demonstrated the limits of simple neural nets. Alain Colmerauer developed the Prolog computer language. Ted Shortliffe demonstrated the power of rule-based systems for knowledge representation and inference in medical diagnosis and therapy in what is sometimes called the first expert system. Hans Moravec developed the first computer-controlled vehicle to autonomously negotiate cluttered obstacle courses.

1980s

In the 1980s, neural networks became widely used thanks to the backpropagation algorithm, first described by Paul Werbos in 1974. The team of Ernst Dickmanns built the first robot cars, which drove at up to 55 mph on empty streets.

1990s and the turn of the century

The 1990s marked major achievements in many areas of AI and demonstrations of various applications. In 1995, one of Dickmanns' robot cars drove more than 1000 miles in traffic at up to 110 mph. Deep Blue, a chess-playing computer, beat Garry Kasparov in a famous six-game match in 1997. The Defense Advanced Research Projects Agency stated that the costs saved by implementing AI methods for scheduling units in the first Persian Gulf War had repaid the US government's entire investment in AI research since the 1950s. Honda built the first prototypes of humanoid robots like the one depicted above.

During the 1990s and 2000s, AI became strongly influenced by probability theory and statistics. Bayesian networks are the focus of this movement, providing links to more rigorous topics in statistics and engineering such as Markov models and Kalman filters, and bridging the divide between neat and scruffy approaches. After the September 11, 2001 attacks, there was much renewed interest in and funding for threat-detection AI systems, including machine vision research and data mining. Despite the hype, however, excitement about Bayesian AI is perhaps now fading again, as successful Bayesian models have appeared only for tiny statistical tasks (such as finding principal components probabilistically) and appear to be intractable for general perception and decision-making.

The 2010s

Advanced statistical techniques (loosely known as deep learning), access to large amounts of data and faster computers enabled advances in machine learning and perception. By the mid 2010s, machine learning applications were used throughout the world.

In a 2011 Jeopardy! quiz show exhibition match, IBM's question answering system, Watson, defeated the two greatest Jeopardy! champions, Brad Rutter and Ken Jennings, by a significant margin.[2] The Kinect, which provides a 3D body–motion interface for the Xbox 360 and the Xbox One, uses algorithms that emerged from lengthy AI research,[3] as do intelligent personal assistants in smartphones.[4]

In March 2016, AlphaGo won 4 out of 5 games of Go in a match with Go champion Lee Sedol, becoming the first computer Go-playing system to beat a 9-dan professional Go player without handicaps.[5] Other examples include Microsoft's development of a Skype system that can automatically translate from one language to another and Facebook's system that can describe images to blind people.

AI in philosophy

The strong AI vs. weak AI debate is a hot topic among AI philosophers. It involves the philosophy of mind and the mind-body problem. Most notably, Roger Penrose in his book The Emperor's New Mind and John Searle with his "Chinese room" thought experiment argue that true consciousness cannot be achieved by formal logic systems, while Douglas Hofstadter in Gödel, Escher, Bach and Daniel Dennett in Consciousness Explained argue in favor of functionalism, the view that mental states (beliefs, desires, being in pain, etc.) are constituted solely by their functional role. Many strong AI supporters consider artificial consciousness the holy grail of artificial intelligence. Edsger Dijkstra famously opined that the debate had little importance: "The question of whether a computer can think is no more interesting than the question of whether a submarine can swim."

Epistemology, the study of knowledge, also makes contact with AI, as engineers find themselves debating similar questions to philosophers about how best to represent and use knowledge and information.

AI in business

Banks use artificial intelligence systems to organize operations, invest in stocks, and manage properties. In August 2001, robots beat humans in a simulated financial trading competition.[6] A medical clinic can use artificial intelligence systems to organize bed schedules, make a staff rotation, and provide medical information. Many practical applications depend on artificial neural networks, which pattern their organization in mimicry of a brain's neurons and have been found to excel in pattern recognition. Financial institutions have long used such systems to detect charges or claims outside of the norm, flagging these for human investigation. Neural networks are also being widely deployed in homeland security, speech and text recognition, medical diagnosis, data mining, and e-mail spam filtering.

Robots have also become common in many industries. They are often given jobs that are considered dangerous to humans. Robots have proven effective in highly repetitive jobs, where a lapse in human concentration may lead to mistakes or accidents, and in other jobs that humans may find degrading. General Motors uses around 16,000 robots for tasks such as painting, welding, and assembly. Japan leads the world in the use of robots.

Areas of AI Implementation

- Artificial Creativity

- Artificial life

- Automated reasoning

- Automation

- Behavior-based robotics

- Bio-inspired computing

- Cognitive robotics

- Concept Mining

- Cybernetics

- Data mining

- Developmental robotics

- Epigenetic robotics

- E-mail spam filtering

- Game theory and Strategic planning

- Hybrid intelligent system

- Intelligent agent

- Intelligent control

- Knowledge Representation

- Knowledge Acquisition

- Natural language processing, Translation, and Chatterbots

- Non-linear control

- Pattern recognition

- Optical character recognition

- Handwriting recognition

- Speech recognition

- Facial recognition

- Semantic web

- Virtual reality and Image processing

Notes

- ↑ John McCarthy, What is Artificial Intelligence? Retrieved February 22, 2020.

- ↑ John Markoff, Computer Wins on ‘Jeopardy!’: Trivial, It’s Not The New York Times, February 16, 2011. Retrieved February 22, 2020.

- ↑ Harry Fairhead, Kinect's AI breakthrough explained i-programmer.info, March 26, 2011. Retrieved February 22, 2020.

- ↑ Dan Rowinski, Virtual Personal Assistants & The Future Of Your Smartphone Readwrite, January 15, 2013. Retrieved February 22, 2020.

- ↑ Artificial intelligence: Google's AlphaGo beats Go master Lee Se-dol BBCNews, March 12, 2016. Retrieved February 22, 2020.

- ↑ Robots beat humans in trading battle BBCNews, August 8, 2001. Retrieved February 22, 2020.

References

- Haag, Stephen, Maeve Cummings, and Donald J. McCubbrey. Management Information Systems for the Information Age 5th ed. New York: McGraw-Hill, 2004. ISBN 0073023884

- Craig, John J. Introduction to Robotics: Mechanics and Control. Upper Saddle River, NJ: Pearson Prentice Hall, 2003. ISBN 0201543613

- Fox, John, and Subrata Das. Safe and Sound: Artificial Intelligence in Hazardous Applications. Menlo Park, California: AAAI Press, 2000. ISBN 0262062119

External links

All links retrieved August 16, 2023.

- John McCarthy's frequently asked questions about AI

- Jürgen Schmidhuber Artificial Intelligence History Highlights and Outlook

- Richard Wray, Google Users Promised Artificial Intelligence

- Intelligent Systems Division National Aeronautics and Space Administration (NASA).

- AI Statistics About Smarter Machines TechJury.

- Astounding Artificial Intelligence Statistics for 2020

Credits

New World Encyclopedia writers and editors rewrote and completed the Wikipedia article in accordance with New World Encyclopedia standards. This article abides by terms of the Creative Commons CC-by-sa 3.0 License (CC-by-sa), which may be used and disseminated with proper attribution. Credit is due under the terms of this license that can reference both the New World Encyclopedia contributors and the selfless volunteer contributors of the Wikimedia Foundation.

Note: Some restrictions may apply to use of individual images which are separately licensed.